When AI first stepped onto the scene, it was like a scoop of vanilla — bland, basic, and, frankly, more of a novelty than a tool. Fast forward to today, and we’re way past “once upon a time.” These models aren’t just writing bedtime stories anymore; they’re writing code, debugging apps, and helping developers ship projects faster than ever.

But the thing is, not all AI is created equal. And if you’ve ever relied on clean, bug-free code (or if you’re like me, someone who casually dabbles in dev tools and needs things to just work), you’ll know this isn’t about which model is trending on X. It’s about which one actually helps you get things done.

If you read my previous article where I compared Claude and ChatGPT as general-purpose AI assistants, you already know I’m obsessed with putting these tools through their paces. This time, though, I narrowed the lens.

I gave Claude and ChatGPT the same 10 real-world programming prompts, covering everything from writing scripts to explaining code and solving bugs. My goal was to see which one truly deserves a spot in your coding workflow.

In this no-fluff comparison, I’ll walk you through how each model handles code generation, debugging, documentation, and more. I’ll highlight where each AI nailed it and where it didn’t quite get it.

And just to be clear, I’m not a pro developer. I’m an enthusiast who loves testing smart tools. So, if you’re curious about which AI can help you code smarter, not harder, let’s dig into it.

TL;DR: Key takeaways from this article

- Claude and ChatGPT are game-changers for coding, helping developers generate code, debug, and document faster.

- Claude focuses on safety, context-aware reasoning, and deep summarization, making it great for complex, logic-heavy tasks.

- ChatGPT is versatile and excels in various coding tasks, from code generation to real-time research, thanks to its internet access.

- Performance tests across 10 coding tasks show that both models handle coding accurately and well, with ChatGPT leading in clarity and Claude in debugging and documentation.

- Choosing the right AI model depends on your needs, as Claude and ChatGPT both have their strengths.

Overview of Claude and ChatGPT

Before we get into how each AI performs under pressure, let me quickly introduce the two heavyweights I tested: Claude and ChatGPT.

Claude

What is Claude?

Claude isn’t just another clever AI name; it’s widely reported to be a nod to Claude Shannon, the father of information theory. And honestly, that’s fitting because this model feels like it was built by someone who cares about doing things right.

Developed by Anthropic, Claude is designed to be conversational, careful, and context-savvy. One of the big selling points? It doesn’t hallucinate as much. That means when it writes or explains code, it’s less likely to just make stuff up, which, if you’ve ever been burned by a buggy AI suggestion, is a huge win.


Claude’s strength lies in its ability to hold a very human-like conversation while also reasoning through complex prompts. It doesn’t pull in live internet data like some tools do. Instead, it leans fully on its (very large) training brain. It also boasts a massive context window (up to 75,000 words), so if you want to feed it an entire codebase or technical document, Claude won’t blink. It’s built for deep analysis, long-form logic, and thoughtful, structured output.

It’s kind of like pairing up with that one team member who quietly gets everything done and triple-checks it all, just to be safe.

How does Claude work?

Claude operates like a quiet genius in the background, trained on oceans of data, armed with serious reasoning chops, and unbothered by the chaos of the internet. Unlike some models that tap into live data on the fly, Claude is self-contained. What it knows, it really knows. And what it doesn’t know, it won’t pretend.

When I tested it, I found Claude excels in generating clean, structured output, whether it’s summarizing a dense technical doc, analyzing patterns, or writing code with thoughtful context. It’s built to be more than just helpful; it’s methodical, reliable, and strangely introspective for a bot.

You can access it via browser or on your phone (both iOS and Android), which makes it handy for professionals who want serious insights on the go. The trade-off is zero live internet access. So, if you ask it for current events or trending GitHub repos, it’ll give you the “Sorry, I can’t do that.” But for most coding and reasoning tasks, it more than holds its own.

Claude at a glance:

| Developer | Anthropic |

| Year launched | 2023 |

| Type of AI tool | Conversational AI and Large Language Model (LLM) |

| Top 3 use cases | Content structuring, analytical reasoning, and summarization |

| Who it’s for | Writers, researchers, developers, and business professionals |

| Starting price | $20/month |

| Free version | Yes (with limitations) |

ChatGPT

What is ChatGPT?

ChatGPT is kind of the Beyoncé of AI. It’s everywhere, it’s wildly talented, and somehow, it keeps reinventing itself. I’ve lost count of how many times it’s saved me from a blank screen or helped untangle a messy script. And if you’ve been even remotely interested in AI lately, chances are you’ve already tried it or heard someone say, “Why don’t you just ask ChatGPT?”

Backed by OpenAI and powered by the GPT-4o model, ChatGPT isn’t just about chatting. It’s a multi-tool for your brain. Whether you’re writing code, fixing bugs, whipping up documentation, or brainstorming product ideas, it responds fast and, often, frighteningly well.

What makes it shine for developers is the blend of fluency and functionality. You can ask it to explain a concept like you’re five, then immediately pivot to generating a full-stack CRUD app. Even better, it plays well with plug-ins and APIs, which means it’s not just giving you answers; it can take action, too.

From coding companions to productivity hacks, ChatGPT has become the go-to co-pilot for developers, writers, researchers, and pretty much anyone trying to work smarter, not harder.

How does ChatGPT work?

ChatGPT isn’t just repeating patterns like a glorified autocomplete. It runs on a beast of a language model (GPT-4o, if you’re fancy) that uses deep learning and reinforcement learning from human feedback (RLHF) to generate scarily good responses. It’s constantly refining its understanding of context and intent, not just the words you type but what you mean behind them.

Earlier versions struggled a bit with long conversations (you know, like when you asked it a question in part one and it forgot by part three). But newer models like GPT-4 Turbo now handle memory better and respond faster, all while being more accurate with complex prompts. That means fewer hallucinations and more nuanced responses.

One of the big advantages of ChatGPT is real-time web access. Unlike Claude, ChatGPT can tap into the internet for live updates. That makes it gold for anyone doing SEO, research, or trying to write content that isn’t trapped in 2023. It can even browse sources and cite them if you ask nicely.

And yes, you can use it just about anywhere: on desktop, mobile, and even inside other apps, thanks to its API integrations. Whether you’re a startup founder, solo coder, content creator, or just someone who hates starting from a blank screen, ChatGPT feels like having an extra brain on call 24/7.

ChatGPT at a glance

| Year launched | 2022 |

| Type of AI tool | Generative AI for natural language processing |

| Top 3 use cases | Content creation, idea generation, SEO recommendations |

| Who can use it? | Marketers, content creators, bloggers, SEO professionals |

| Starting price | $20/month (ChatGPT Plus) |

| Free version | Yes, with GPT-4o mini and limited access to GPT-4o |

Both Claude and ChatGPT bring serious power to the table, but I didn’t want to stop at feature lists and marketing promises. I wanted to see how they perform when it’s time to write clean code, fix bugs, and help you move faster as a developer (or a curious enthusiast like me).

So, I put them to the test with 10 real coding tasks that any developer might run into.

How I evaluated Claude and ChatGPT’s coding skills

I didn’t just toss them a bunch of random stuff and call it a day. No. I gave Claude and ChatGPT a full-on developer workout. Ten real-world coding tasks. No fluff. Just practical challenges that you or I might face when working on a project, trying to squash bugs, or documenting our work for others.

Here’s what I asked them to do:

- Code generation: From building a REST API to cranking out classic sorting algorithms.

- Bug fixing: I fed them broken code and watched to see if they could sniff out the problem and patch it up.

- Writing documentation: Think docstrings, README files, and clear instructions that don’t make you want to cry.

- Logic-based problems: The brainy stuff: recursion, dynamic programming, and algorithms that test reasoning, not just memorized patterns.

Each model got the same prompts: no handholding, no extra hints. I ran everything in a controlled coding environment to keep things fair.

And here’s how I judged their performance:

- Accuracy: Does the code run, or does it throw tantrums (read: errors)?

- Clarity: Is the code clean, readable, and something I’d be okay handing off to another dev?

- Efficiency: Are we looking at elegant solutions or bloated messes?

- Debugging skill: Can it spot the issue and explain what went wrong?

- Explanations: I don’t just want answers. I want to learn. Did the model walk me through the logic behind its decisions?

This wasn’t a speed test or a popularity contest. It was about practicality. If you’re planning to rely on an AI model to help you code, you want one that doesn’t just sound smart but actually is smart where it counts. Let’s get into the results.

Prompt-by-prompt analysis

Let’s break it down with real examples.

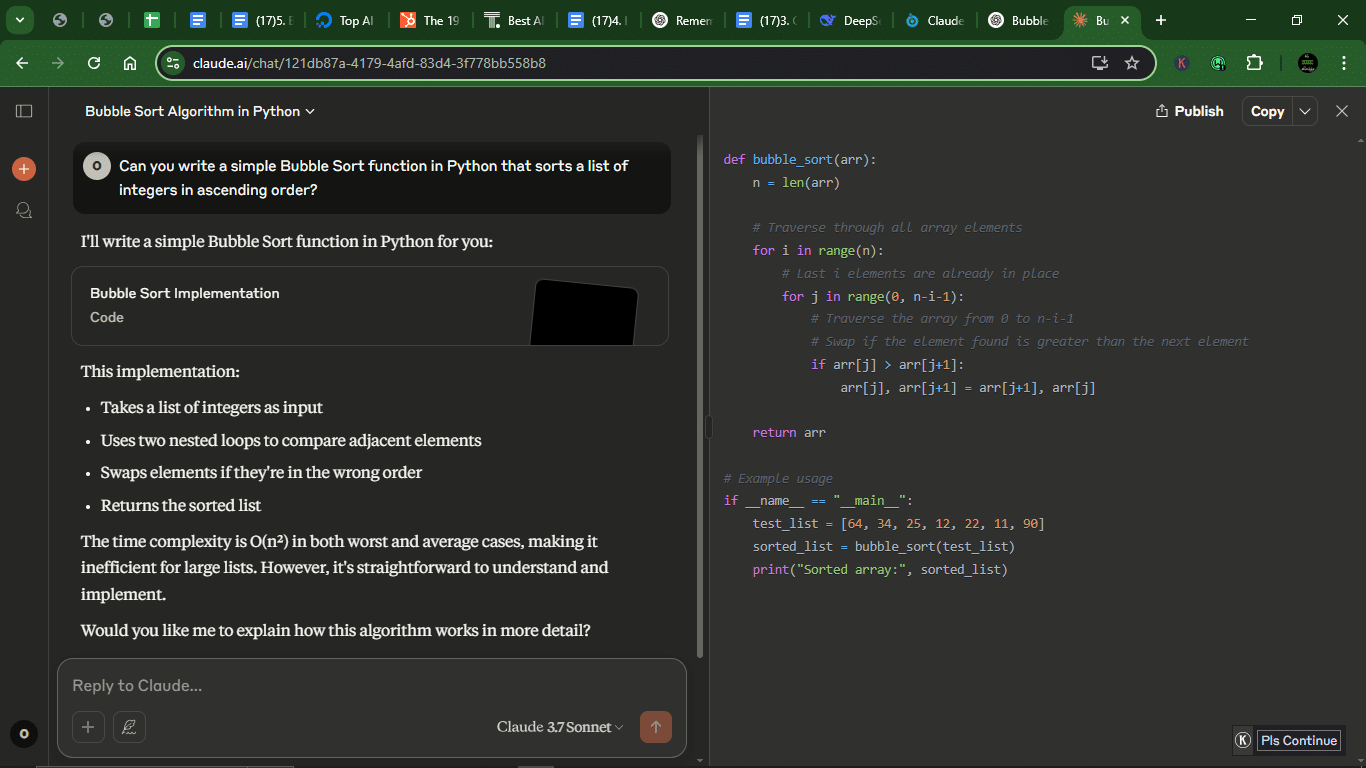

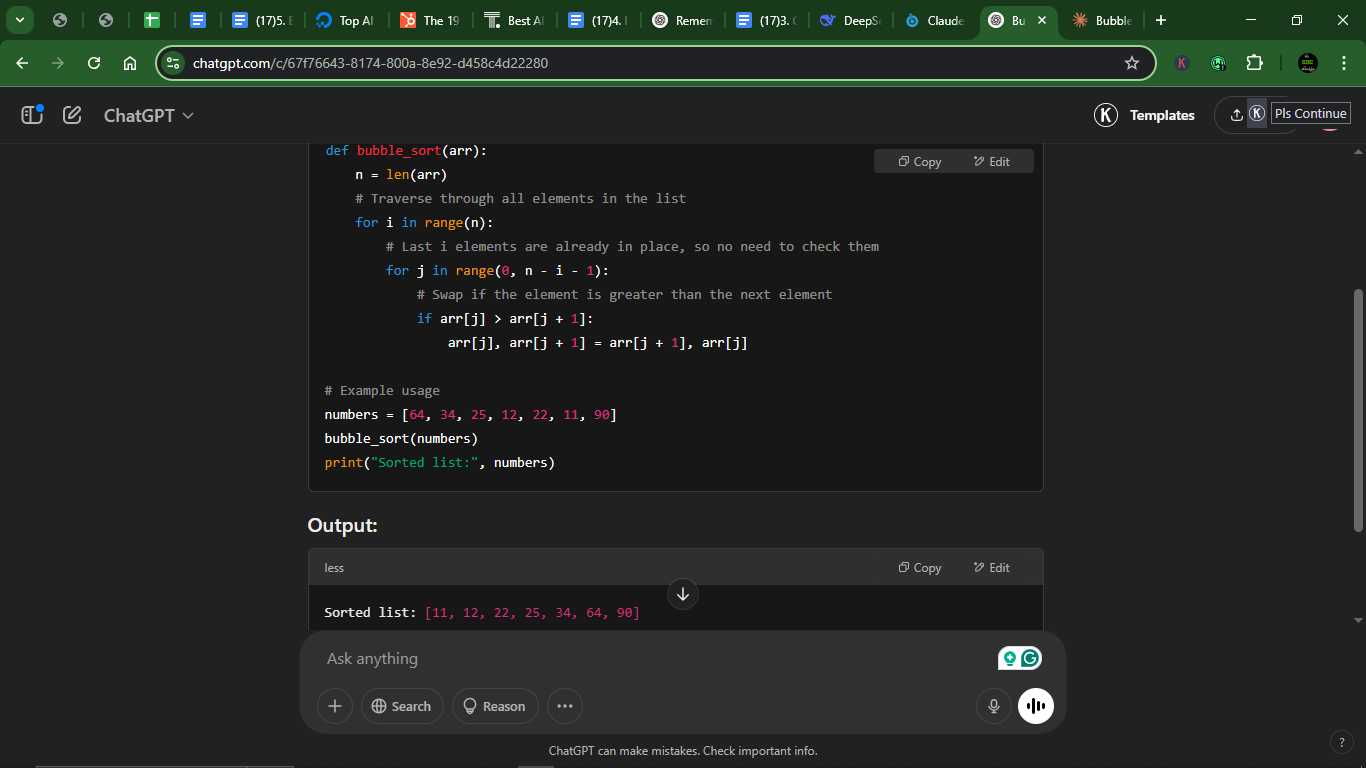

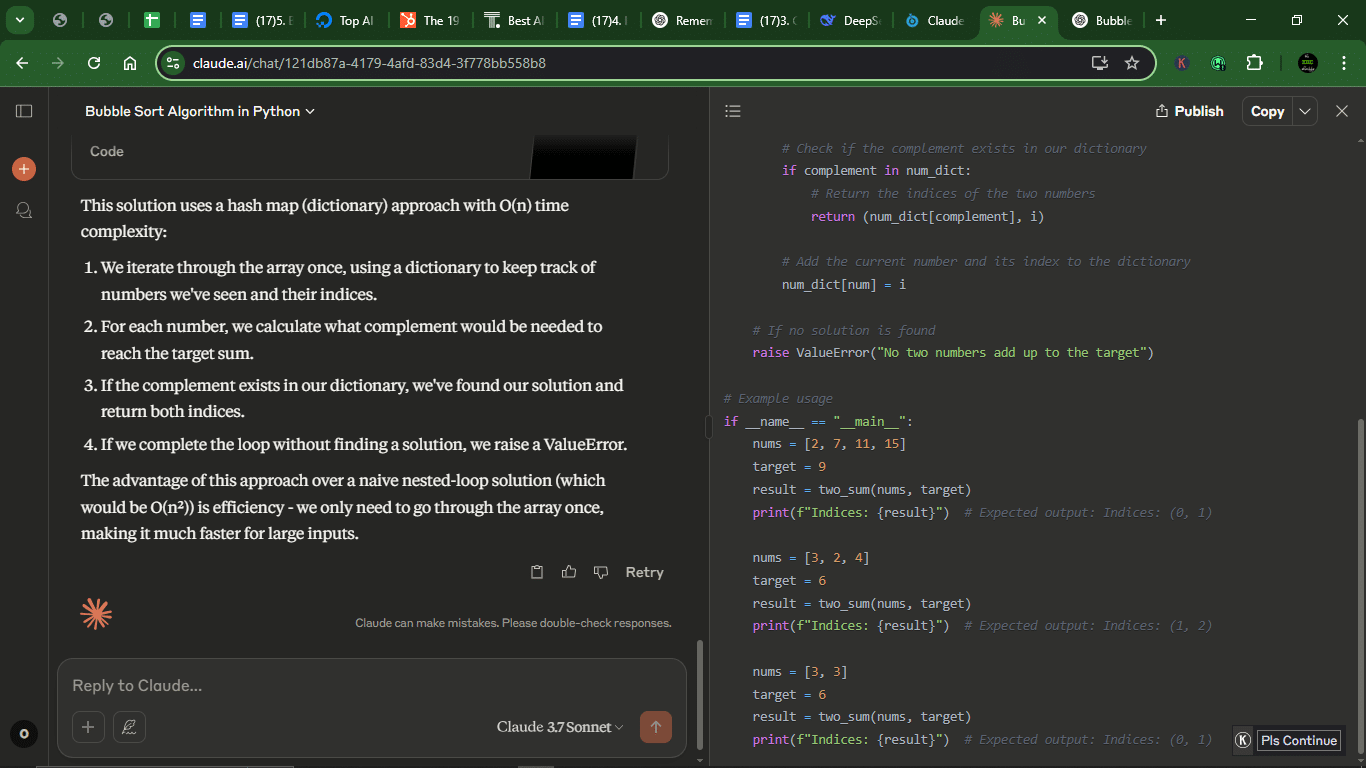

Prompt 1: Write a Bubble Sort Algorithm in Python

I kicked things off with the classic Bubble Sort. You know, the algorithm everyone learns but no one uses. I just wanted clean code, nothing extra.

Prompt: “Can you write a simple Bubble Sort function in Python that sorts a list of integers in ascending order?”

Result

Claude:

ChatGPT:

1. Accuracy: Both implementations correctly perform a Bubble Sort and will sort the list in ascending order without errors.

2. Clarity:

- Claude:

- Uses return arr, making it explicit that the function returns the sorted list.

- Includes an if __name__ == "__main__": block, which is good practice for script-like execution.

- Comments are slightly more verbose but helpful.

- ChatGPT:

- Modifies the list in place (no return statement), which is fine but less explicit.

- Uses a more straightforward example usage block.

- Comments are concise but equally clear.

3. Efficiency: Both use the standard Bubble Sort algorithm with O(n²) time complexity. Neither includes early termination (optimization for nearly sorted lists).

4. Explanations: Neither provided additional educational explanations beyond the code comments.

Winner: Claude. Claude wins slightly due to better clarity, explicitness, and Python best practices. ChatGPT’s version is functionally identical but slightly less explicit.
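To make the comparison concrete, here is a minimal sketch of a Bubble Sort that returns the list explicitly and adds the early-termination check that neither model included. It is not either model's verbatim output; the function name and sample list are my own placeholders.

```python
def bubble_sort(arr):
    """Sort a list of integers in ascending order (in place) and return it."""
    n = len(arr)
    for i in range(n - 1):
        swapped = False
        # After each pass, the largest remaining item has settled at the end,
        # so the inner loop can stop one position earlier every time.
        for j in range(n - 1 - i):
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
                swapped = True
        # Early termination: a pass with no swaps means the list is sorted.
        if not swapped:
            break
    return arr


if __name__ == "__main__":
    print(bubble_sort([64, 34, 25, 12, 22, 11, 90]))
```

The swapped flag is what turns the best case (an already sorted list) into a single pass, while the worst case stays at O(n²).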

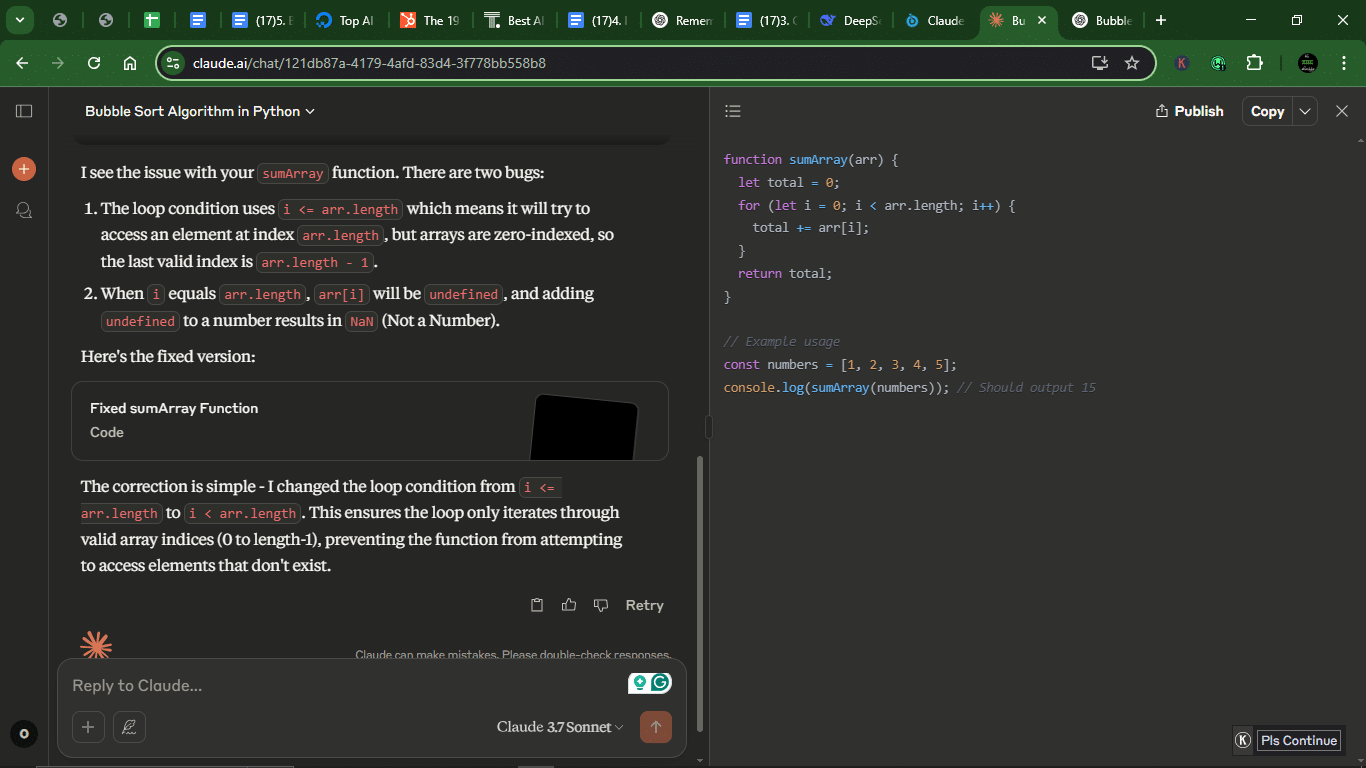

Prompt 2: Fix the bug in this JavaScript function

The function had an off-by-one error in a for-loop.

Prompt: Here’s a JavaScript function that should return the sum of elements in an array, but it’s giving the wrong result. Can you fix it?

    function sumArray(arr) {
      let total = 0;
      for (let i = 0; i <= arr.length; i++) {
        total += arr[i];
      }
      return total;
    }

Result

Claude:

ChatGPT:

1. Accuracy: Both correctly fix the off-by-one error (i <= arr.length → i < arr.length). And both versions now correctly sum the array.

2. Clarity:

- Claude:

- Explains both symptoms of the bug (the out-of-bounds access and the resulting NaN) clearly.

- Provides a fixed function with an example.

- Technical but thorough explanation.

- ChatGPT:

- Explains the issue in a friendlier, more conversational tone (“sneaky bug”).

- Offers two solutions: the fixed loop and a reduce() alternative.

- More concise but equally clear.

3. Efficiency: Both fixes use O(n) time complexity (standard for array summation). ChatGPT’s reduce() version is functionally identical but more idiomatic.

4. Debugging skill: Both correctly identify the issue (<= vs <). Claude explains why NaN happens (adding undefined). ChatGPT also explains the issue well and offers an alternative.

5. Explanations: Claude gives a detailed explanation of the bug but offers no extra optimizations or alternatives. ChatGPT also explains the bug clearly and conversationally, but provides a second, cleaner solution (reduce). In addition, ChatGPT asks if edge cases should be handled, which shows deeper thinking.

Winner: ChatGPT. ChatGPT wins this round for its nuanced explanation, its alternative (and cleaner) solution, and its proactive question about edge cases.

Prompt 3: Build a Flask API with one GET and one POST route

I asked for a basic Flask API with a GET and POST route. Bonus points if they added input validation or didn’t forget about JSON errors.

Prompt: Write a basic Flask API in Python with one GET route (that returns a welcome message) and one POST route that accepts JSON input and returns a custom response.

Result

1. Accuracy: Both implementations correctly set up a Flask API with a GET route (/) that returns a welcome message and a POST route (/process or /greet) that handles JSON input.

2. Clarity:

- Claude:

- More verbose (extra comments, checks for JSON, explicit error handling).

- Returns structured responses (status, message, received_data).

- Includes detailed setup instructions (curl example).

- ChatGPT:

- Cleaner, more concise (minimalist but functional).

- Uses data.get(‘name’, ‘Stranger’) for default value handling.

- No explicit error checks (assumes JSON input).

3. Efficiency: Both use Flask’s default routing (no performance difference). Claude does extra validation (checks is_json, requires name field), while ChatGPT skips validation but handles missing names gracefully.

4. Explanations: Claude explains each step (JSON check, error responses) and provides detailed usage examples (curl command). ChatGPT is less verbose but includes example requests/responses and uses default values (‘Stranger’) for better UX.

Winner: Claude. Claude wins this round for better error handling (explicit JSON checks, field validation), more structured responses (status, message), and detailed documentation (curl example). ChatGPT’s version is cleaner but lacks robustness.
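For a sense of what the two styles look like side by side, here is a rough sketch that combines the explicit JSON check with the default-value fallback. It assumes Flask is installed; the /greet route name comes from the comparison above, while the message strings and response fields are placeholders of mine rather than either model's actual output.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


@app.route("/", methods=["GET"])
def welcome():
    # GET route: return a simple welcome message.
    return jsonify({"message": "Welcome to the API!"})


@app.route("/greet", methods=["POST"])
def greet():
    # Validation style: reject requests that do not declare a JSON body.
    if not request.is_json:
        return jsonify({"status": "error", "message": "Request must be JSON"}), 400
    # silent=True returns None instead of raising on malformed JSON.
    data = request.get_json(silent=True)
    if not isinstance(data, dict):
        return jsonify({"status": "error", "message": "Invalid JSON body"}), 400
    # Default-value style: fall back to "Stranger" when no name is sent.
    name = data.get("name", "Stranger")
    return jsonify({"status": "success", "message": f"Hello, {name}!"})
```

Saved as app.py, this should start with flask --app app run, and a POST to /greet with {"name": "Ada"} returns a greeting for Ada.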

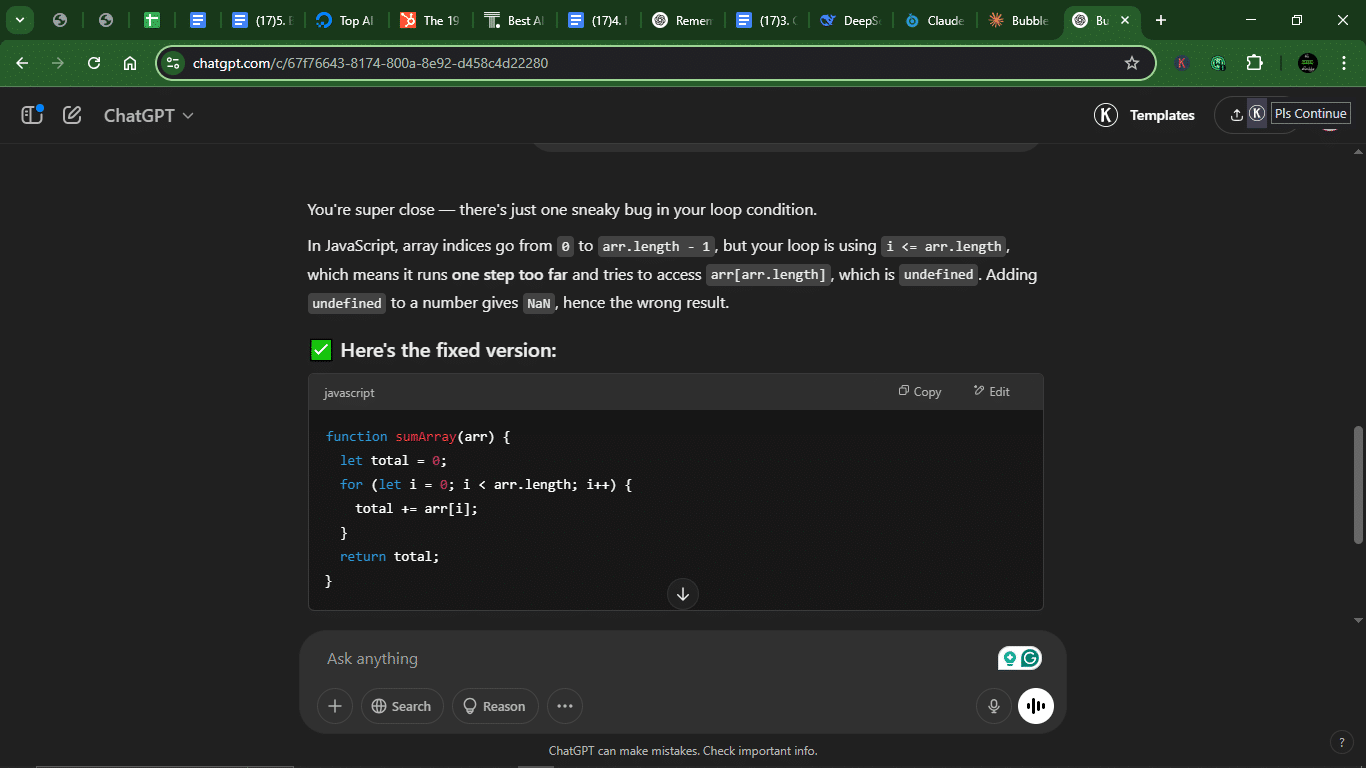

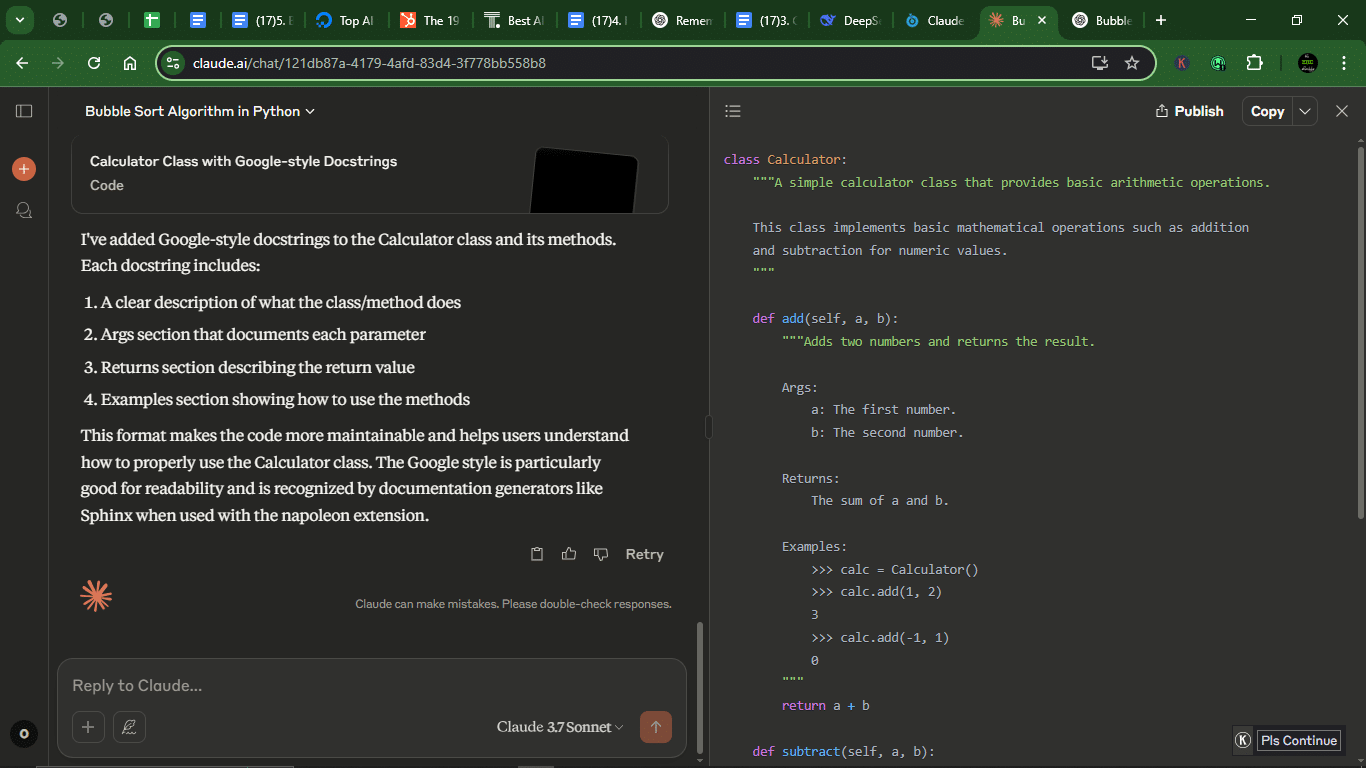

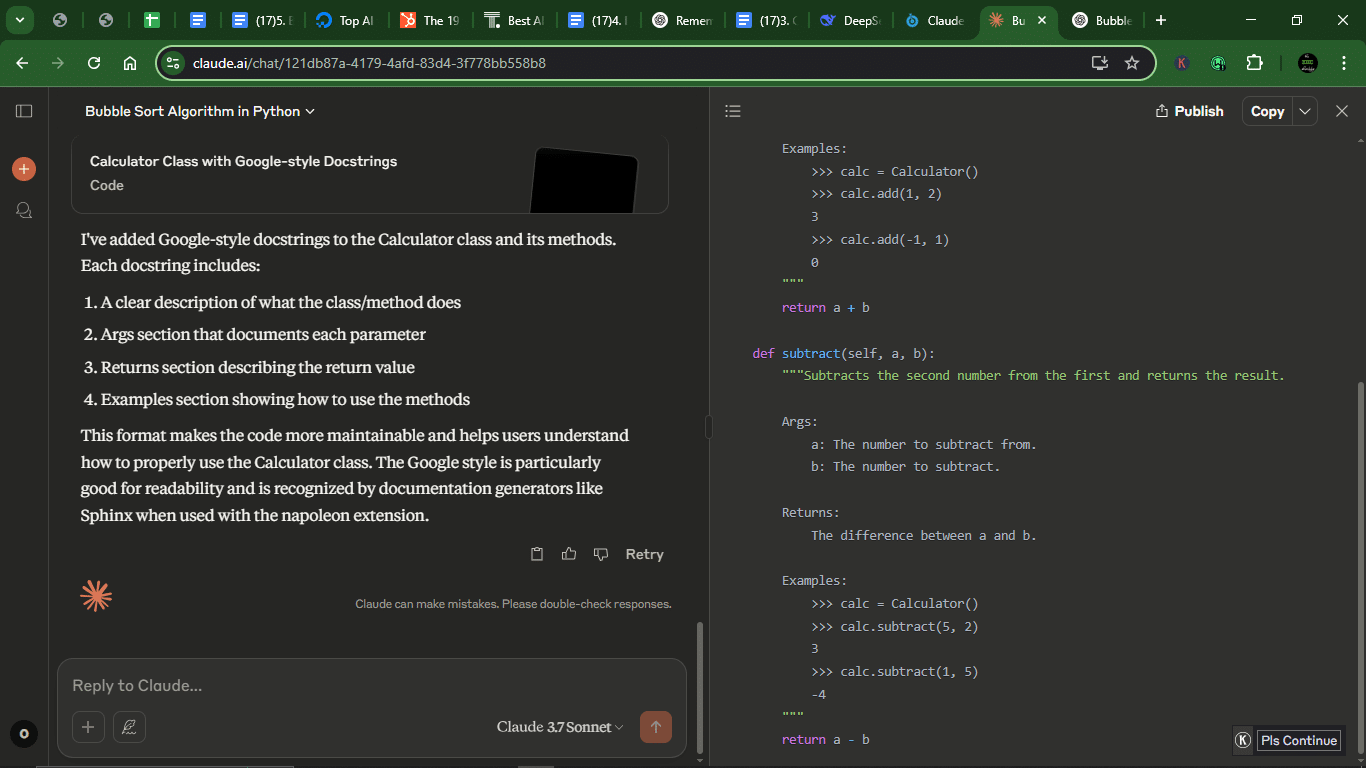

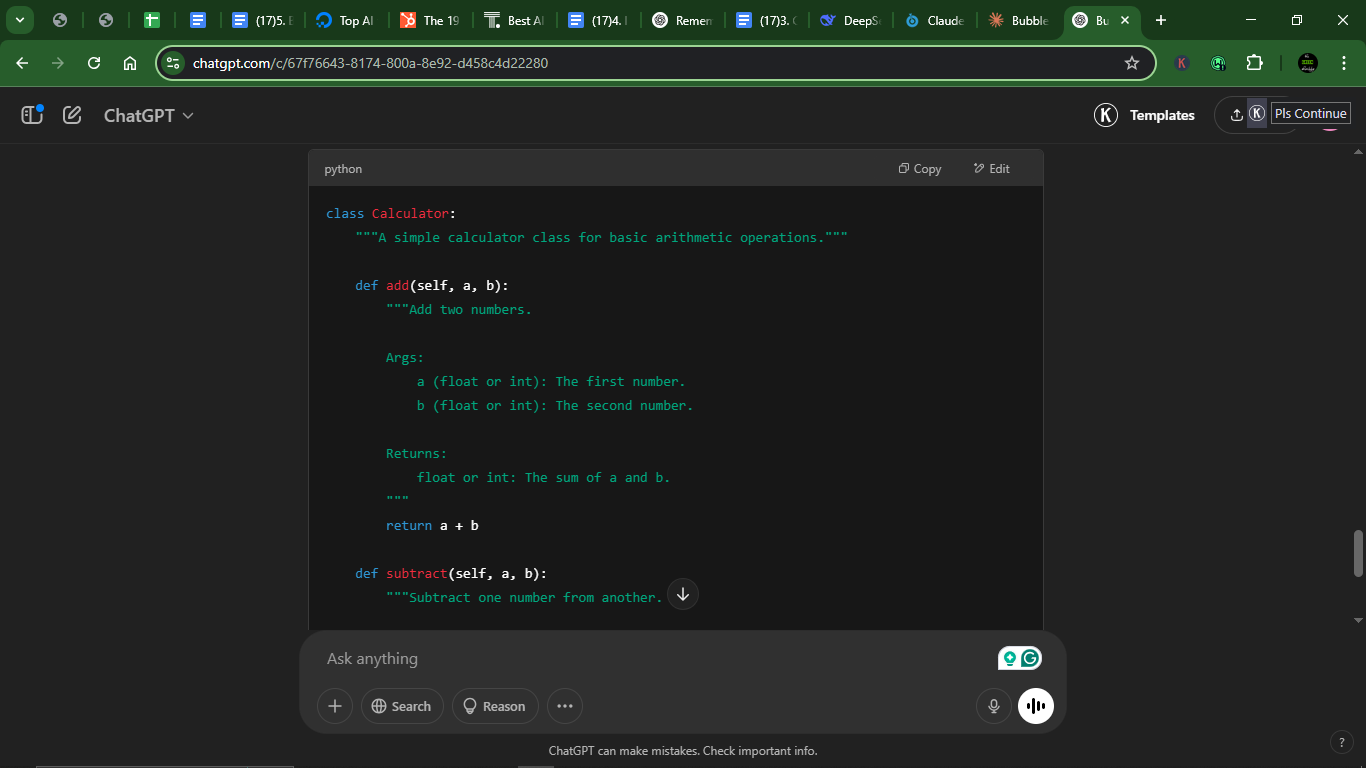

Prompt 4: Generate docstrings for a given Python class

Documentation time. I gave them both a Python class and asked them to write proper docstrings. Neat, readable, and helpful (or bust).

Prompt: “Here’s a Python class. Please add well-structured docstrings for the class and all its methods using Google-style formatting.”

    class Calculator:
        def add(self, a, b):
            return a + b

        def subtract(self, a, b):
            return a - b

Result

Claude:

ChatGPT:

1. Accuracy: Both correctly apply Google-style docstrings to the Calculator class and methods.

2. Clarity:

- Claude:

- More detailed (includes an Examples section in each method).

- The class docstring is more descriptive.

- Verbose but thorough (good for complex projects).

- ChatGPT:

- Cleaner and more concise.

- Specifies argument types (float or int).

- No examples, but still clear.

3. Efficiency: Both docstrings achieve the same goal (documentation). Claude’s examples could be useful for beginners, and ChatGPT’s documented argument types (float or int) improve clarity.

4. Explanations: Claude explains docstring structure in the intro and includes usage examples (helpful for learning). ChatGPT adds no extra explanation, but docstrings are self-explanatory.

Winner: ChatGPT. ChatGPT wins for conciseness (no unnecessary fluff) and explicit argument types (float or int). Claude is the better pick for educational contexts, thanks to its examples.
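If you haven't seen Google-style docstrings before, here is a minimal sketch of the Calculator class that blends the two approaches: argument types in the Args section, plus one short Example block. The wording of the docstrings is my own illustration, not a copy of either response.

```python
class Calculator:
    """A simple calculator for basic arithmetic.

    Example:
        >>> calc = Calculator()
        >>> calc.add(2, 3)
        5
    """

    def add(self, a, b):
        """Add two numbers.

        Args:
            a (float or int): The first number.
            b (float or int): The second number.

        Returns:
            float or int: The sum of a and b.
        """
        return a + b

    def subtract(self, a, b):
        """Subtract one number from another.

        Args:
            a (float or int): The number to subtract from.
            b (float or int): The number to subtract.

        Returns:
            float or int: The result of a minus b.
        """
        return a - b
```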

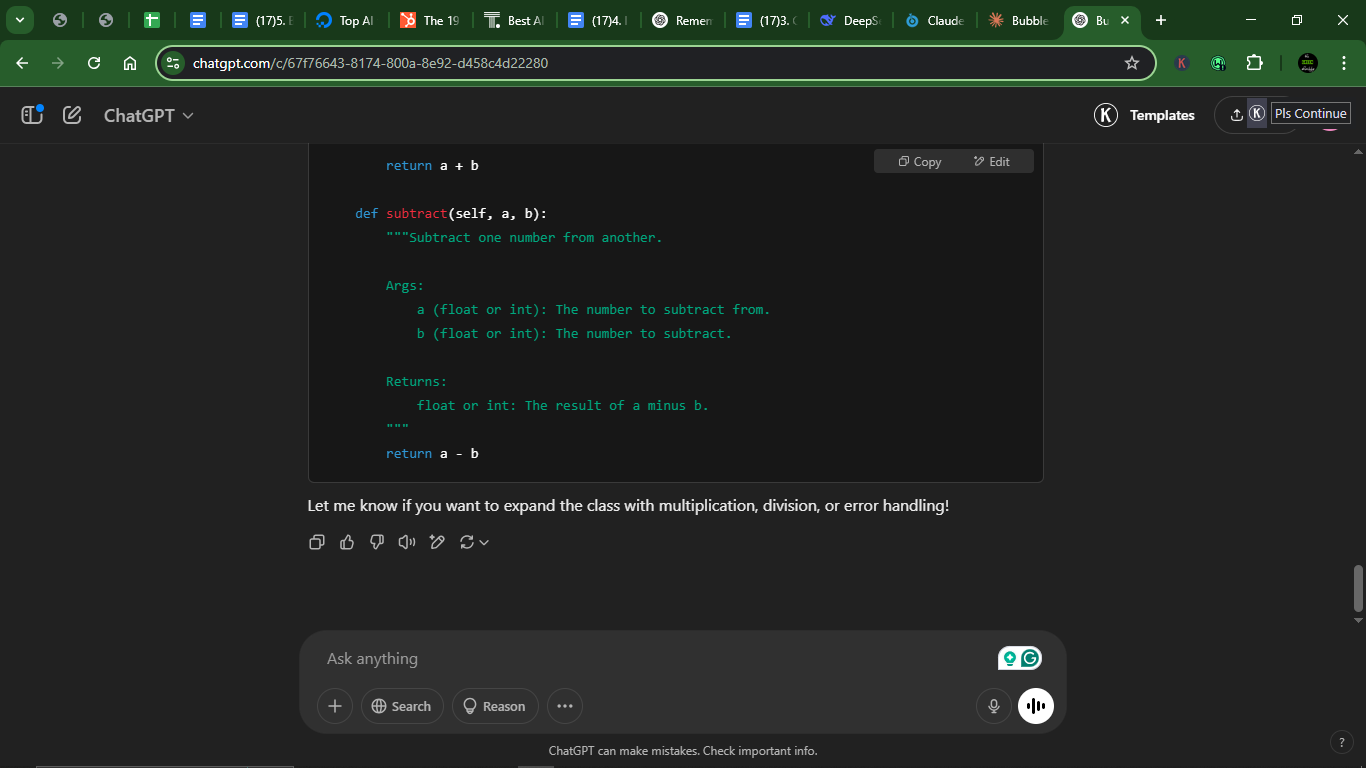

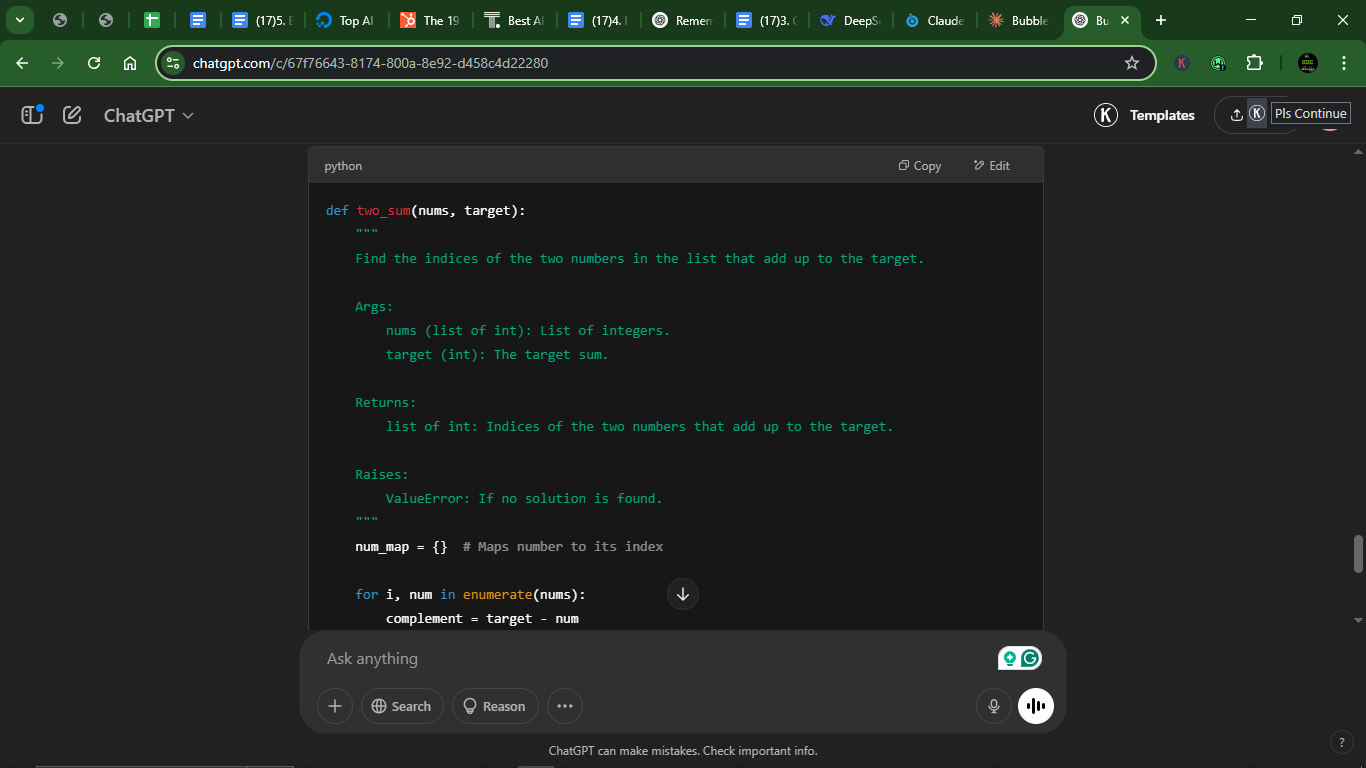

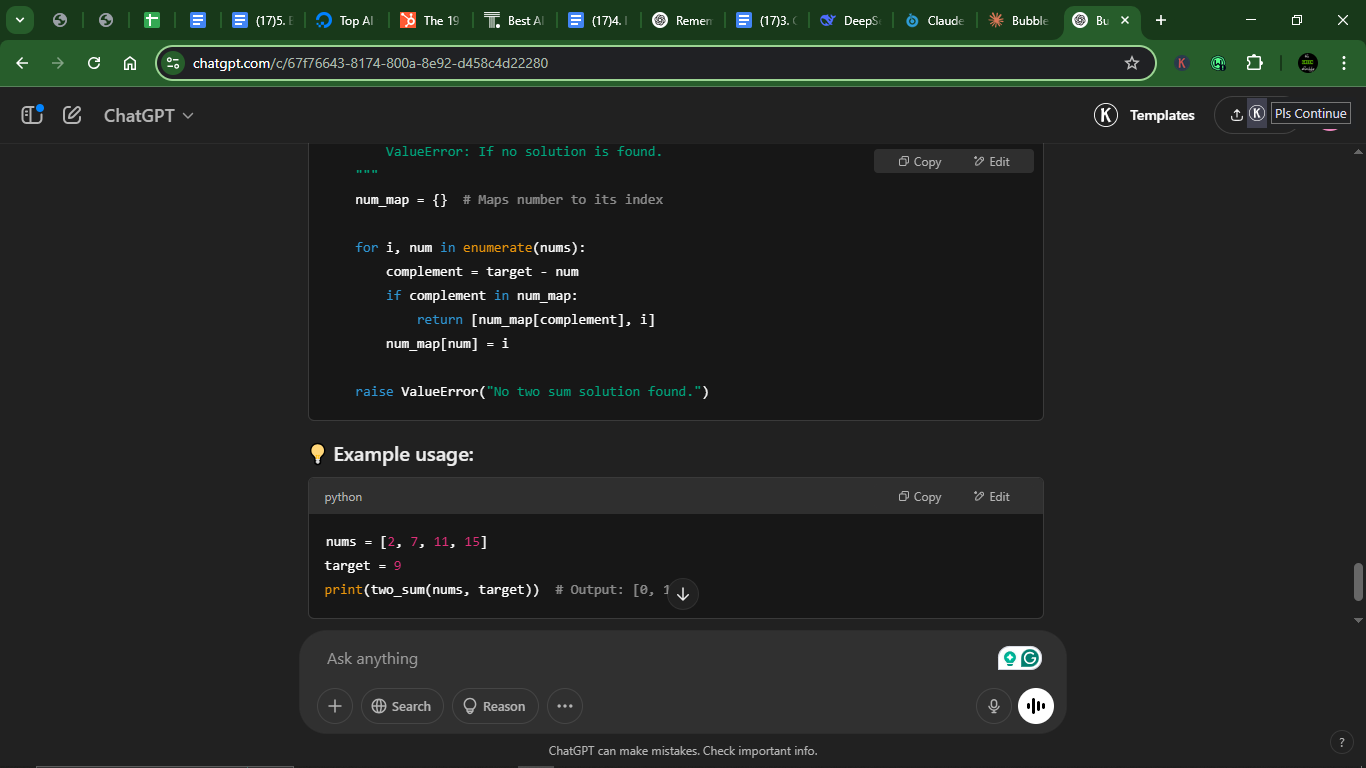

Prompt 5: Solve the “Two Sum” problem (LeetCode-style)

This is basically the “Hello World” of coding challenges. I wanted to see who gave me the faster, smarter solution and didn’t just brute force it.

Prompt: “Write a Python function to solve the Two Sum problem: Given a list of integers and a target number, return the indices of the two numbers that add up to the target.”

Result

Claude:

ChatGPT:

1. Accuracy: Both implementations correctly solve the Two Sum problem using the optimal hash map (O(n)) approach. They handle edge cases (duplicate values, no solution).

2. Clarity:

- Claude:

- More detailed docstring (includes Raises and Returns explicitly).

- Verbose comments inside the function (explains each step).

- Example usage with multiple test cases.

- ChatGPT:

- Cleaner, more concise docstring (still includes all key info).

- No internal comments (the code is simple enough to be self-explanatory).

- Shorter example usage (just one case).

3. Efficiency: Both use the same O(n) algorithm (dictionary-based lookup), giving identical performance in practice.

4. Explanations: Claude gave a detailed explanation before the code (why hash map is optimal), step-by-step comments in the function, and multiple test cases for verification. ChatGPT, on the other hand, gave a brief but clear docstring with no extra explanation, but the code is intuitive.

Winner: Claude. Claude wins for better documentation (detailed docstring + comments), educational value (explains the approach), and including test cases. ChatGPT is cleaner but lacks explanations.
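The hash map approach both models used can be sketched roughly like this. The function name, the choice to raise ValueError when no pair exists, and the sample inputs are assumptions for illustration.

```python
def two_sum(nums, target):
    """Return the indices of the two numbers in nums that add up to target.

    Raises:
        ValueError: If no two numbers add up to the target.
    """
    seen = {}  # maps a value we have already visited to its index
    for index, value in enumerate(nums):
        complement = target - value
        # If the complement was seen earlier, we have found the pair.
        if complement in seen:
            return [seen[complement], index]
        seen[value] = index
    raise ValueError("No two numbers add up to the target")


if __name__ == "__main__":
    print(two_sum([2, 7, 11, 15], 9))
    print(two_sum([3, 3], 6))  # duplicate values still work
```

Each number is visited once and each dictionary lookup is constant time on average, which is where the O(n) comes from. The brute-force alternative checks every pair and lands at O(n²).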

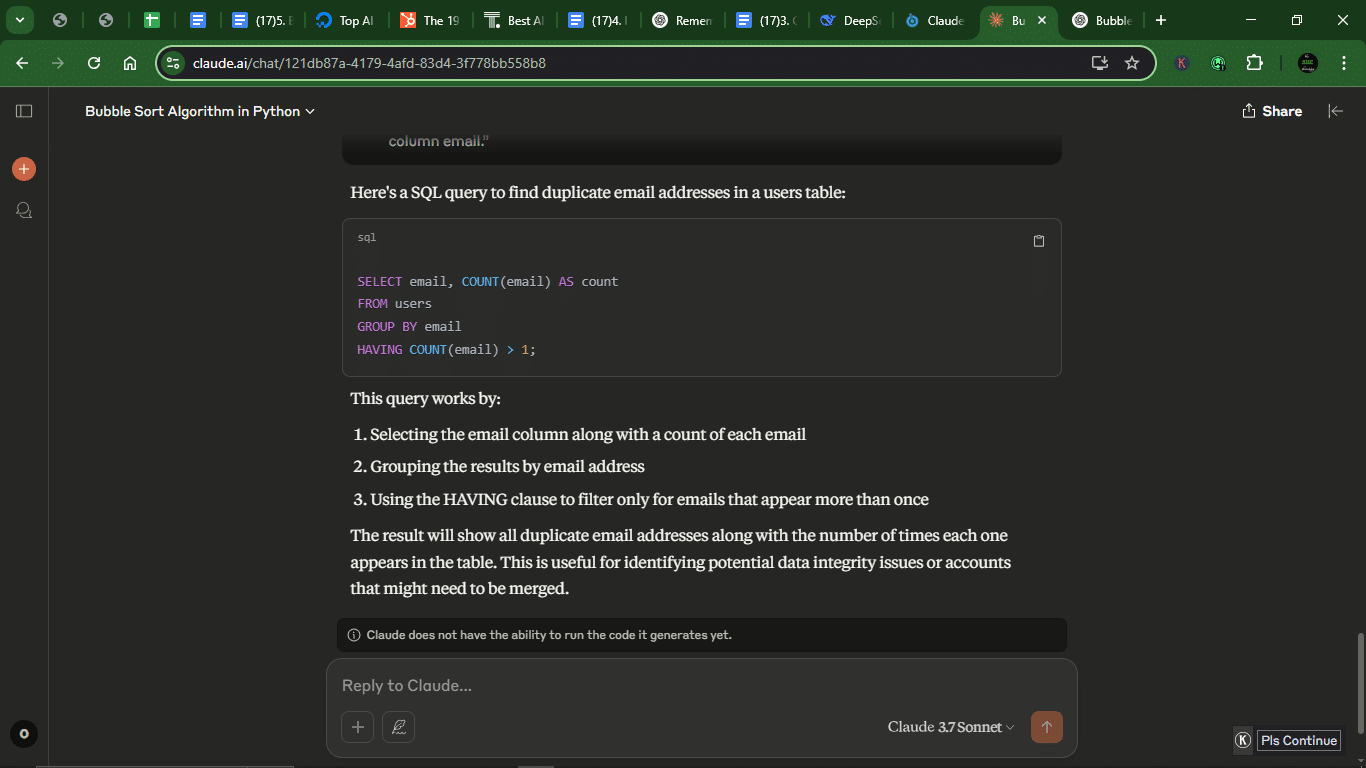

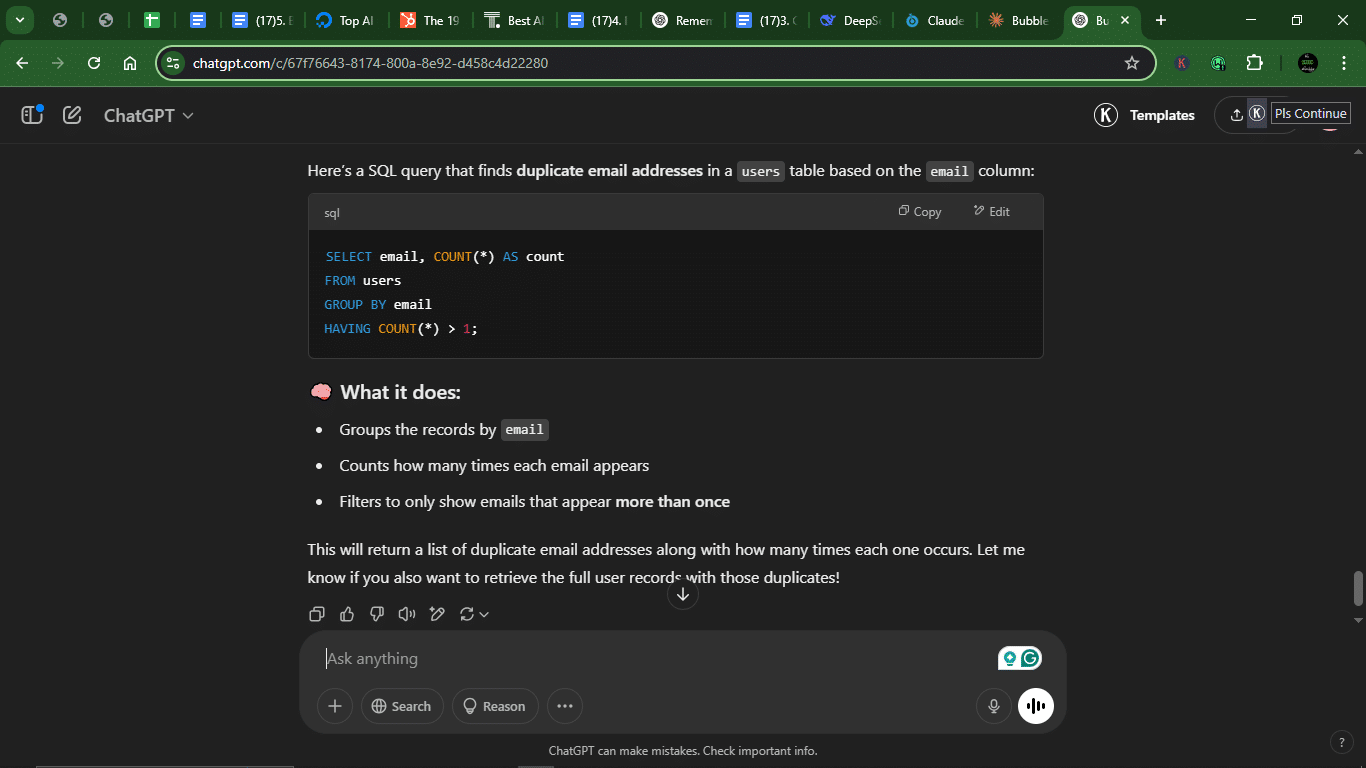

Prompt 6: Write a SQL query to find duplicate emails

This was a straightforward SQL test. The goal was to sniff out duplicate emails using GROUP BY and HAVING.

Prompt: “Write a SQL query to find duplicate email addresses in a users table with a column email.”

Result

Claude:

ChatGPT:

1. Accuracy: Both queries are functionally identical and will correctly find duplicate emails. Both use GROUP BY email with HAVING COUNT(*) > 1.

2. Clarity: Claude explains the query step-by-step (SELECT, GROUP BY, HAVING) and mentions use cases (data integrity, account merging). ChatGPT provides concise bullet points of what the query does and offers to extend the query (retrieve full user records).

3. Efficiency: Both queries are equally efficient (same execution plan).

4. Explanations: Claude gives a detailed breakdown of each clause and provides practical context (why duplicates matter). ChatGPT has a simpler explanation but covers the essentials and offers to help further (shows flexibility).

Winner: ChatGPT. ChatGPT wins for offering a clearer, more concise explanation, as well as proactively offering to extend the solution. Claude’s explanation is more verbose without added value.
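If you want to try the GROUP BY and HAVING pattern yourself, here is a small sketch that runs it against an in-memory SQLite database using Python's built-in sqlite3 module. The table contents, the occurrences alias, and the ORDER BY clause are my additions for a predictable demo.

```python
import sqlite3

# The GROUP BY + HAVING pattern described above.
DUPLICATE_EMAILS_SQL = """
SELECT email, COUNT(*) AS occurrences
FROM users
GROUP BY email
HAVING COUNT(*) > 1
ORDER BY email;
"""


def find_duplicate_emails(conn):
    """Return (email, count) rows for every email that appears more than once."""
    return conn.execute(DUPLICATE_EMAILS_SQL).fetchall()


# Throwaway in-memory database with made-up sample rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [
        ("a@example.com",),
        ("b@example.com",),
        ("a@example.com",),
        ("c@example.com",),
        ("c@example.com",),
        ("c@example.com",),
    ],
)

print(find_duplicate_emails(conn))
```

GROUP BY collapses the rows into one group per email, and HAVING filters those groups after counting, which a WHERE clause cannot do.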

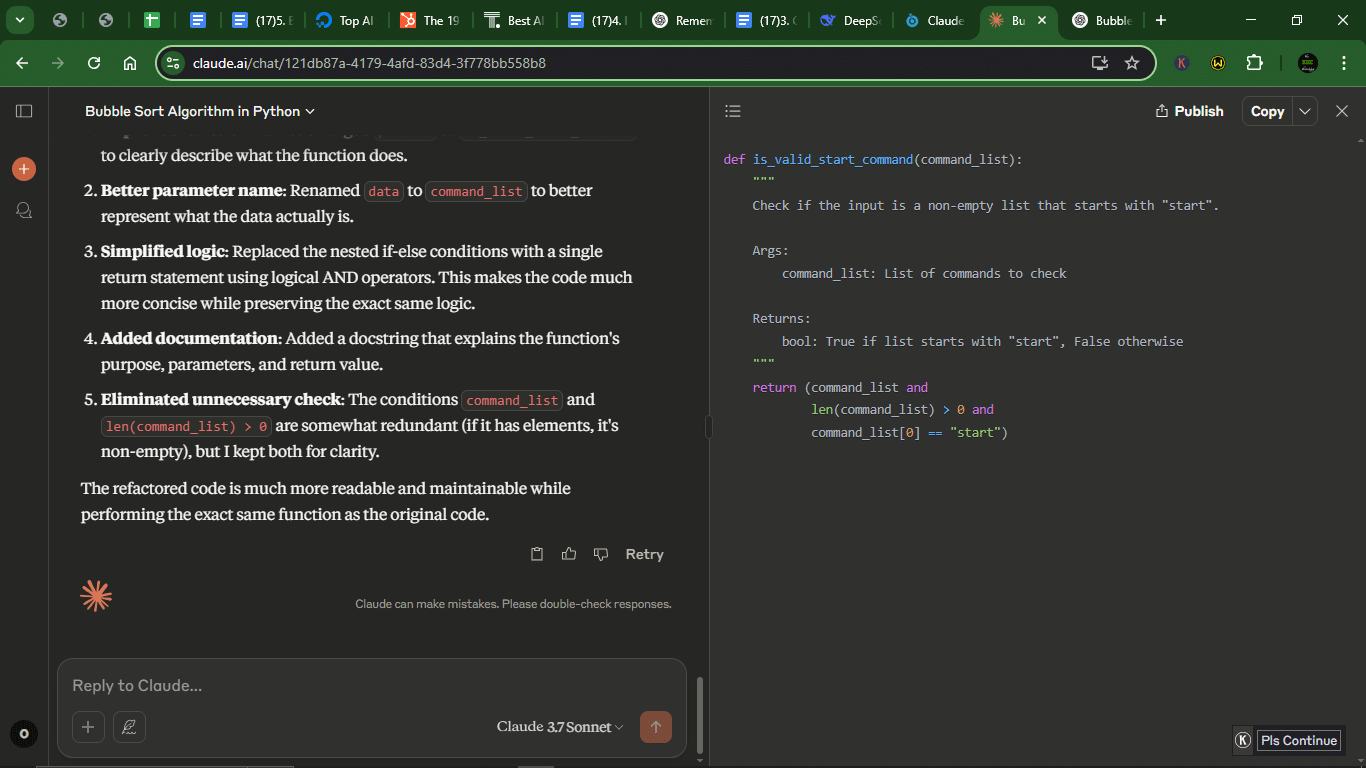

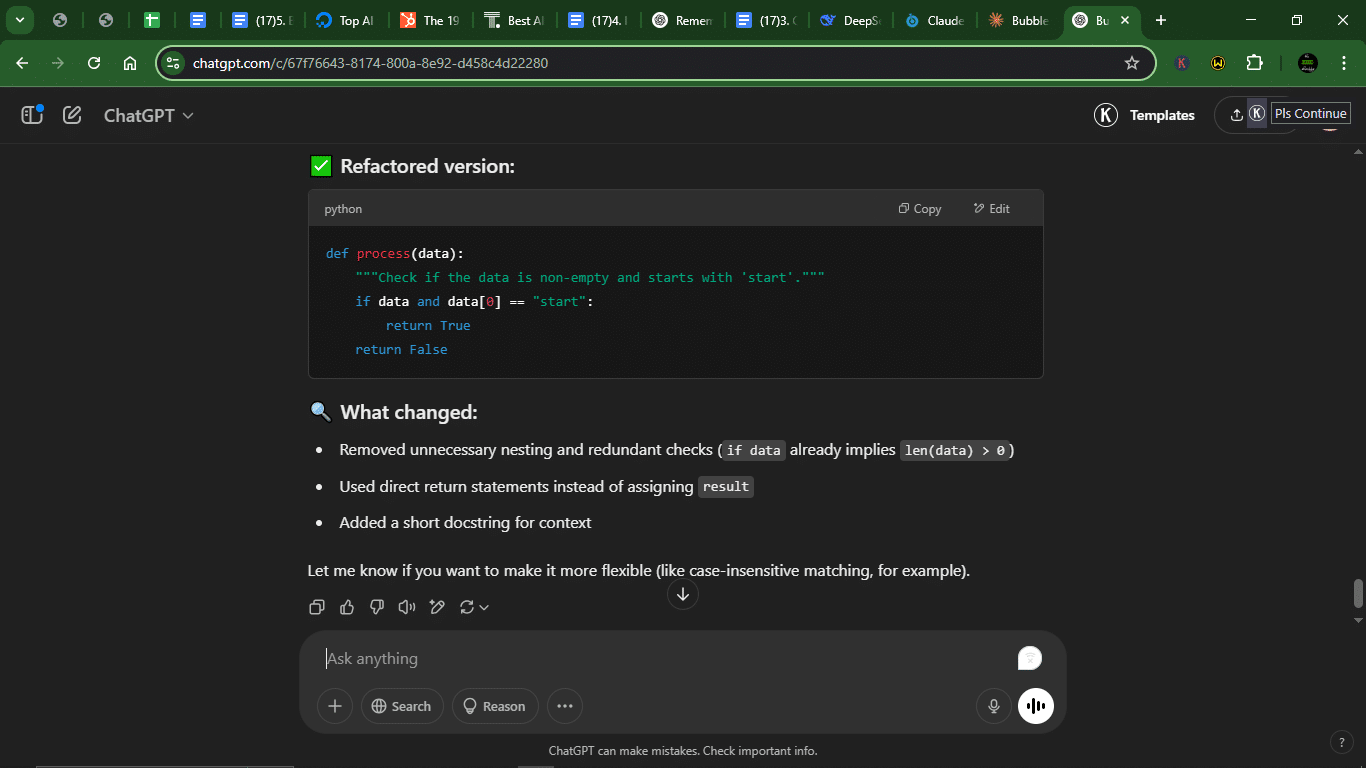

Prompt 7: Refactor code for readability

I gave both AIs a messy Python function loaded with ugly, nested if statements. The mission was to clean it up, rename the chaotic variables, and make it human-friendly.

Prompt: “Refactor this Python code to make it cleaner and easier to read. Focus on simplifying nested conditions and improving variable names.”

    def process(data):
        if data:
            if len(data) > 0:
                if data[0] == "start":
                    result = True
                else:
                    result = False
            else:
                result = False
        else:
            result = False
        return result

Result

Claude:

ChatGPT:

1. Accuracy: Claude and ChatGPT’s refactored versions correctly preserve the original logic. Both handle edge cases (None, empty list, non-list inputs).

2. Clarity:

- Claude:

- Renamed function/variables (is_valid_start_command, command_list).

- Added docstring (explains purpose, args, returns).

- Single return with chained conditions (clear but slightly dense).

- ChatGPT:

- Kept the original function name (process), which is less descriptive.

- Simplified logic (if data and data[0] == “start”).

- Shorter docstring (sufficient but minimal).

3. Efficiency: Both versions avoid redundant checks (e.g., len(data) > 0 is unnecessary if data is truthy).

4. Explanations: Claude breaks down changes (naming, logic, docs) in detail and explicitly mentions redundant checks (kept for clarity). ChatGPT provides brief but clear bullet points of changes and doesn’t explain naming improvements.

Winner: Claude. Claude wins for better naming conventions (is_valid_start_command), more complete documentation, and detailed refactoring rationale. ChatGPT’s version is cleaner but lacks improvements to naming/docs.
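Here is a minimal sketch of where the refactor can end up, using the more descriptive names mentioned above (is_valid_start_command, command_list). The original function is kept alongside it, renamed process_original by me, so the two can be compared; this is an illustration, not either model's exact answer.

```python
def process_original(data):
    """The nested version from the prompt, kept only for comparison."""
    if data:
        if len(data) > 0:
            if data[0] == "start":
                result = True
            else:
                result = False
        else:
            result = False
    else:
        result = False
    return result


def is_valid_start_command(command_list):
    """Return True if command_list is non-empty and its first item is "start".

    Args:
        command_list (list): The commands to inspect. May be None or empty.

    Returns:
        bool: Whether the first command is "start".
    """
    # bool(...) handles None and empty lists; a truthy list is never empty,
    # so the separate len() check from the original is not needed.
    return bool(command_list) and command_list[0] == "start"


if __name__ == "__main__":
    for sample in (None, [], ["start"], ["stop", "start"]):
        print(sample, is_valid_start_command(sample))
```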

Prompt 8: Generate a README for a GitHub project

I tossed them both a basic project description and asked for a README file. I was looking for structure, clarity, and maybe even a badge or two.

Prompt: “Generate a professional and friendly README for a GitHub project called ‘WeatherApp’. It’s a simple Python Flask app that shows the current weather based on city input. Include sections like Features, Installation, Usage, and License.”

Result

1. Accuracy: Both READMEs cover all essential sections (Features, Installation, Usage, License). They correctly assume the Flask structure and API key setup.

2. Clarity:

- Claude:

- More formal/professional tone.

- Detailed project structure (full directory tree).

- Longer descriptions (e.g., the Features list is exhaustive).

- ChatGPT:

- More concise and modern (emoji headers, bullet points).

- Simpler project structure (focuses on key files).

- Easier to scan (better for quick onboarding).

3. Efficiency: Both provide clear installation/usage steps. ChatGPT’s emoji headers make sections faster to identify.

4. Explanations: Claude’s response is more verbose (e.g., “Acknowledgements” section), including formal contributing guidelines (PR steps). ChatGPT’s response is brief but has sufficient explanations.

Winner: ChatGPT.

ChatGPT wins for cleaner, more engaging formatting (emoji, bullet points), a response that’s easier to scan (better for GitHub viewers), and more concise answers that don’t lose key info. Claude’s version is more formal but feels over-detailed.

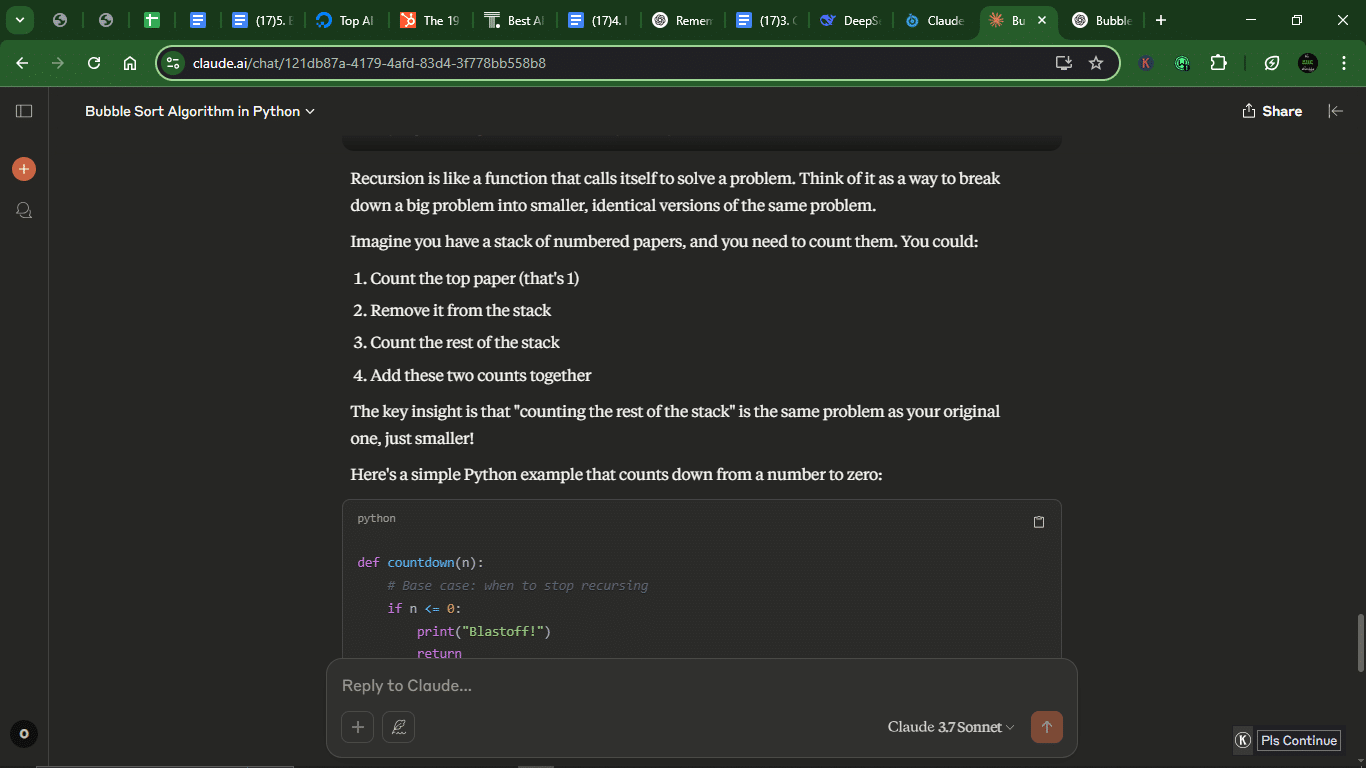

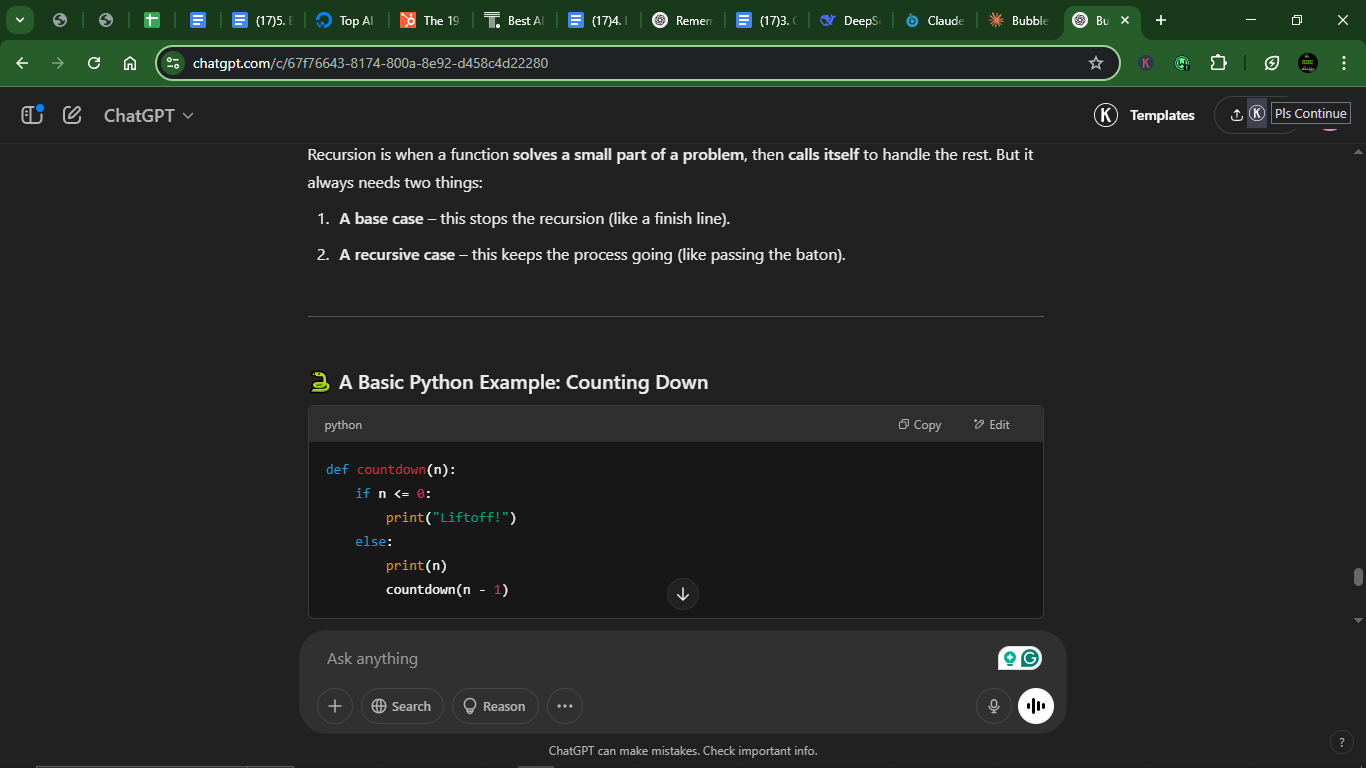

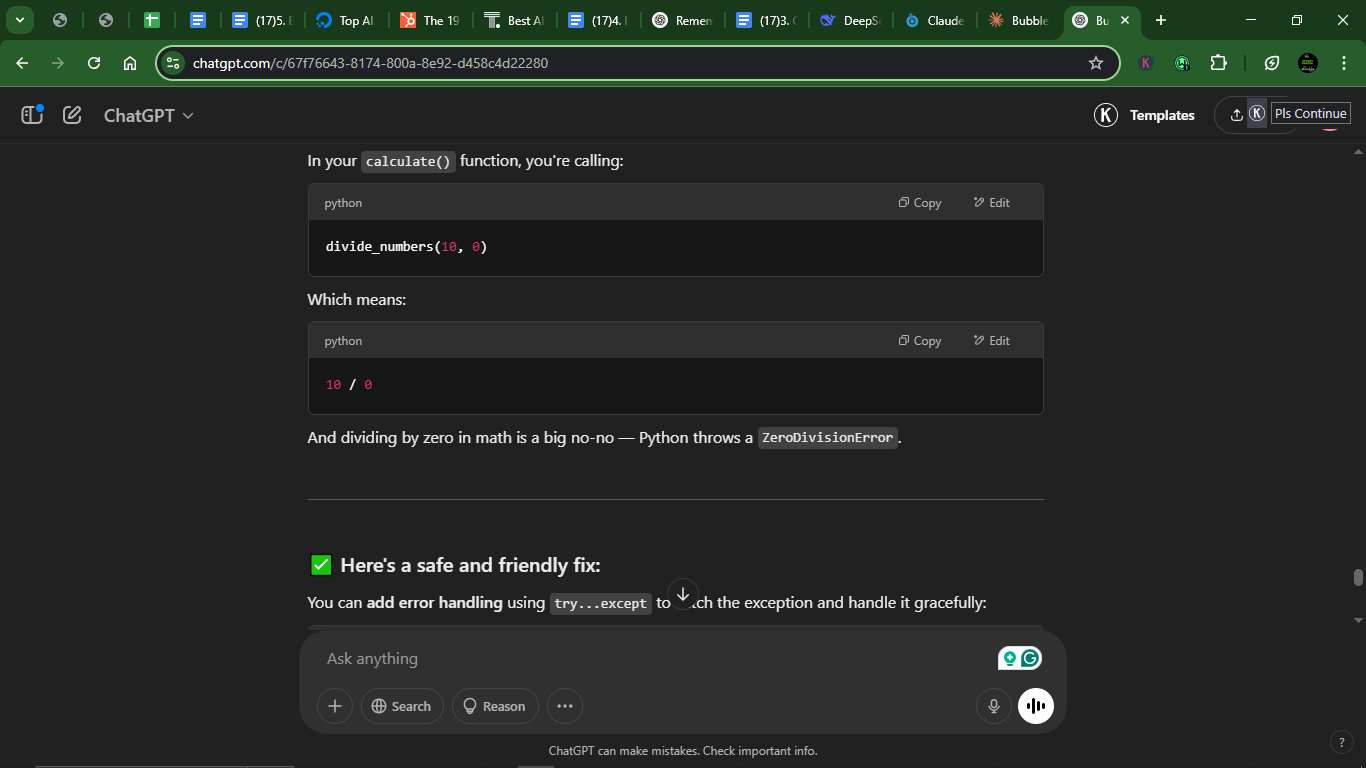

Prompt 9: Explain recursion to a beginner

This is where I tested their teaching chops. I asked them to explain recursion to someone who still confuses a loop with a rollercoaster.

Prompt: “Can you simply explain recursion to someone who’s completely new to programming? Use a basic example in Python.”

Result

Claude:

ChatGPT:

1. Accuracy: Both explanations correctly define recursion with a base case and recursive case, with their Python examples (countdown) working identically.

2. Clarity: Claude uses a paper-stacking analogy (good for visual learners) and reinforces the concept with Russian nesting dolls. It’s text-heavy but thorough. ChatGPT gives a simpler, more concise explanation. It uses “Liftoff!” instead of “Blastoff!” (minor, but friendlier) and makes the step-by-step breakdown of countdown(3) very clear.

3. Efficiency: Both examples are equally efficient (O(n) time).

4. Explanations: Claude’s response contains longer analogies (papers, Russian dolls) and explicitly warns about infinite recursion. ChatGPT gives a clearer step-by-step execution (3 → 2 → 1 → 0) and offers additional examples (factorials, Fibonacci).

Winner: ChatGPT.

ChatGPT wins for having a clearer step-by-step breakdown and a more concise (and modern) tone (e.g., emoji, “Liftoff!”). Claude’s analogies are helpful, but seem over-explained.
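For reference, the countdown example both models reached for looks roughly like the sketch below. I have added a factorial function as the kind of follow-up example ChatGPT offered; the exact wording of the printed message is an assumption.

```python
def countdown(n):
    """Print n, n-1, ..., 1 and then "Liftoff!"."""
    # Base case: stop recursing once we reach zero.
    if n <= 0:
        print("Liftoff!")
        return
    print(n)
    # Recursive case: the same problem, one step smaller.
    countdown(n - 1)


def factorial(n):
    """Return n! for a non-negative integer n."""
    # Base case: 0! is defined as 1.
    if n == 0:
        return 1
    # Recursive case: n! = n * (n - 1)!
    return n * factorial(n - 1)


if __name__ == "__main__":
    countdown(3)
    print(factorial(5))
```

Calling countdown(3) prints 3, then 2, then 1, then "Liftoff!". Without the base case, the function would keep calling itself until Python raises a RecursionError, which is the infinite recursion Claude warned about.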

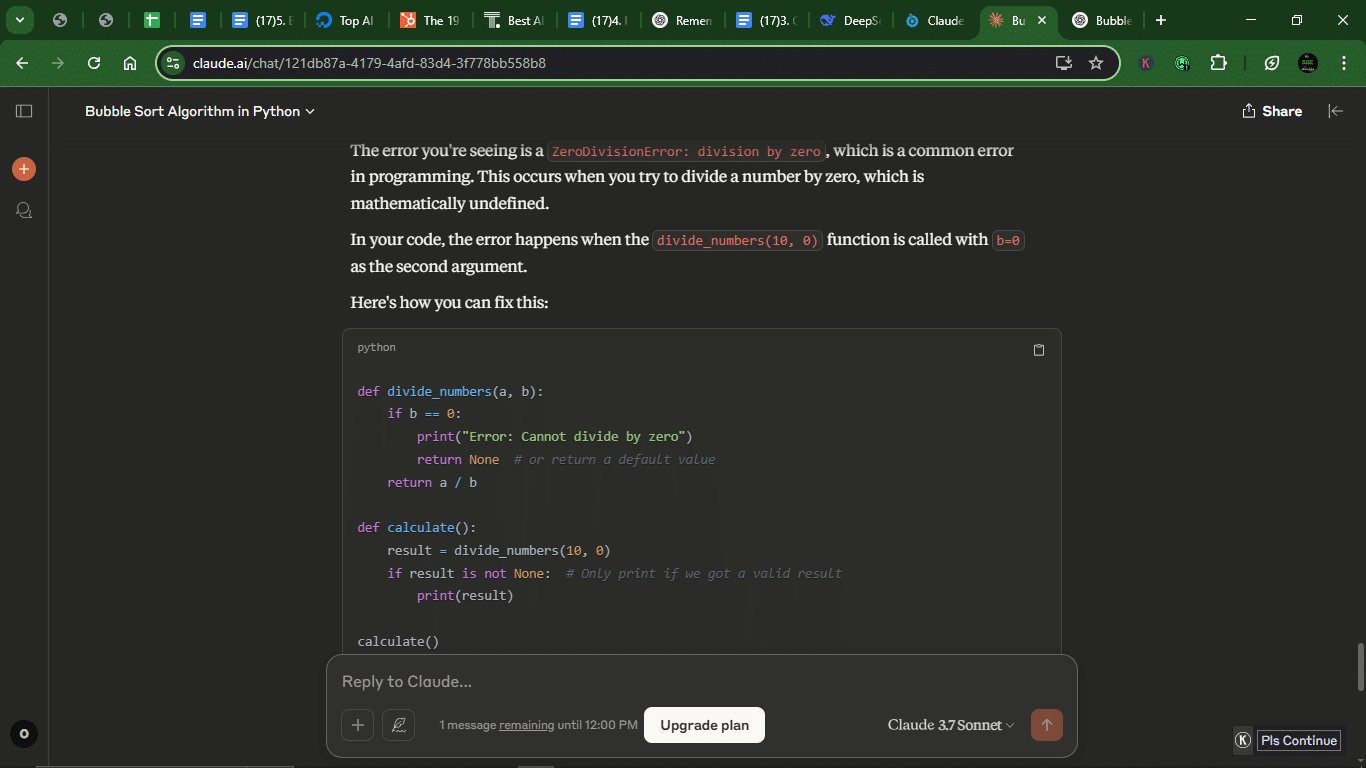

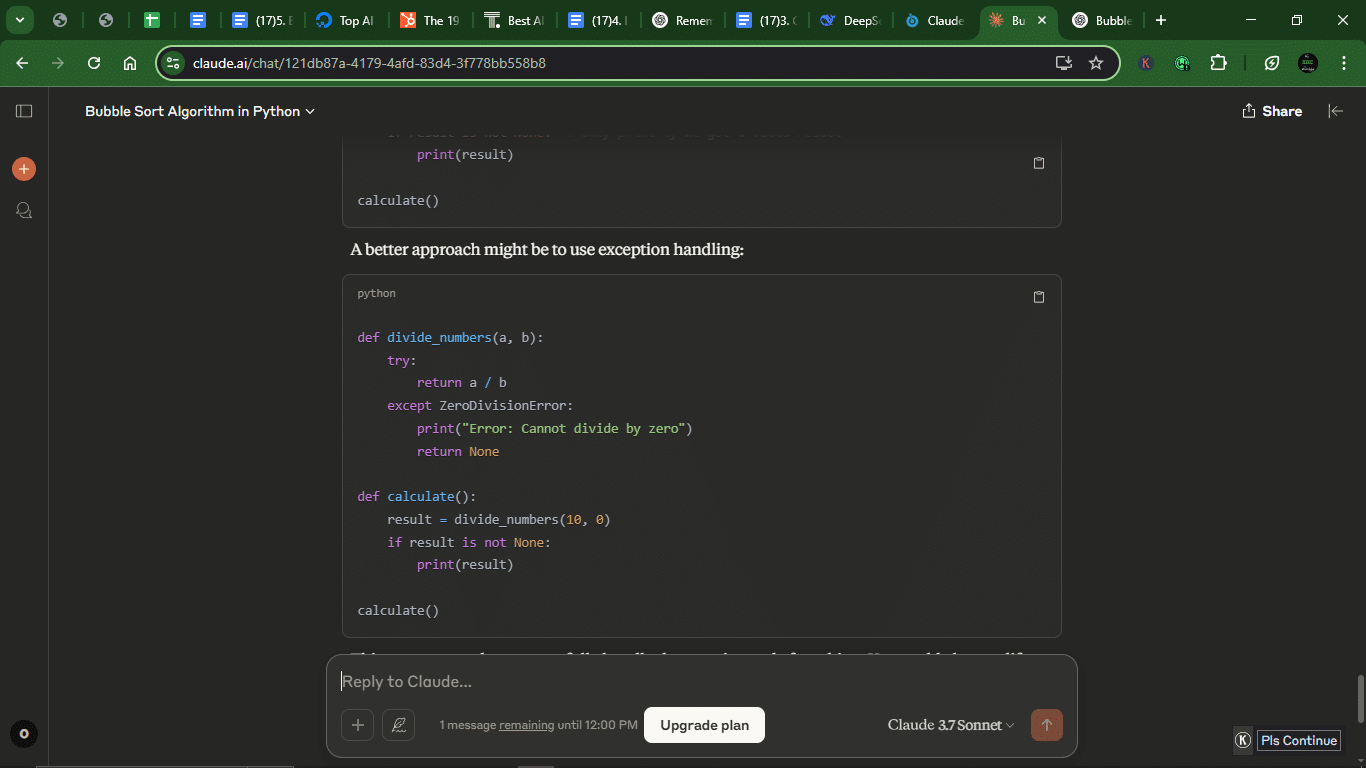

Prompt 10: Debug Python code using a stack trace

I sent over a Python error message, complete with a stack trace. I wanted to see which model could follow the breadcrumbs and fix the issue without going in circles.

Prompt:

“Here’s a Python error and the code that caused it. Can you help me debug it?”

    def divide_numbers(a, b):
        return a / b

    def calculate():
        result = divide_numbers(10, 0)
        print(result)

    calculate()

Error: ZeroDivisionError: division by zero

Result

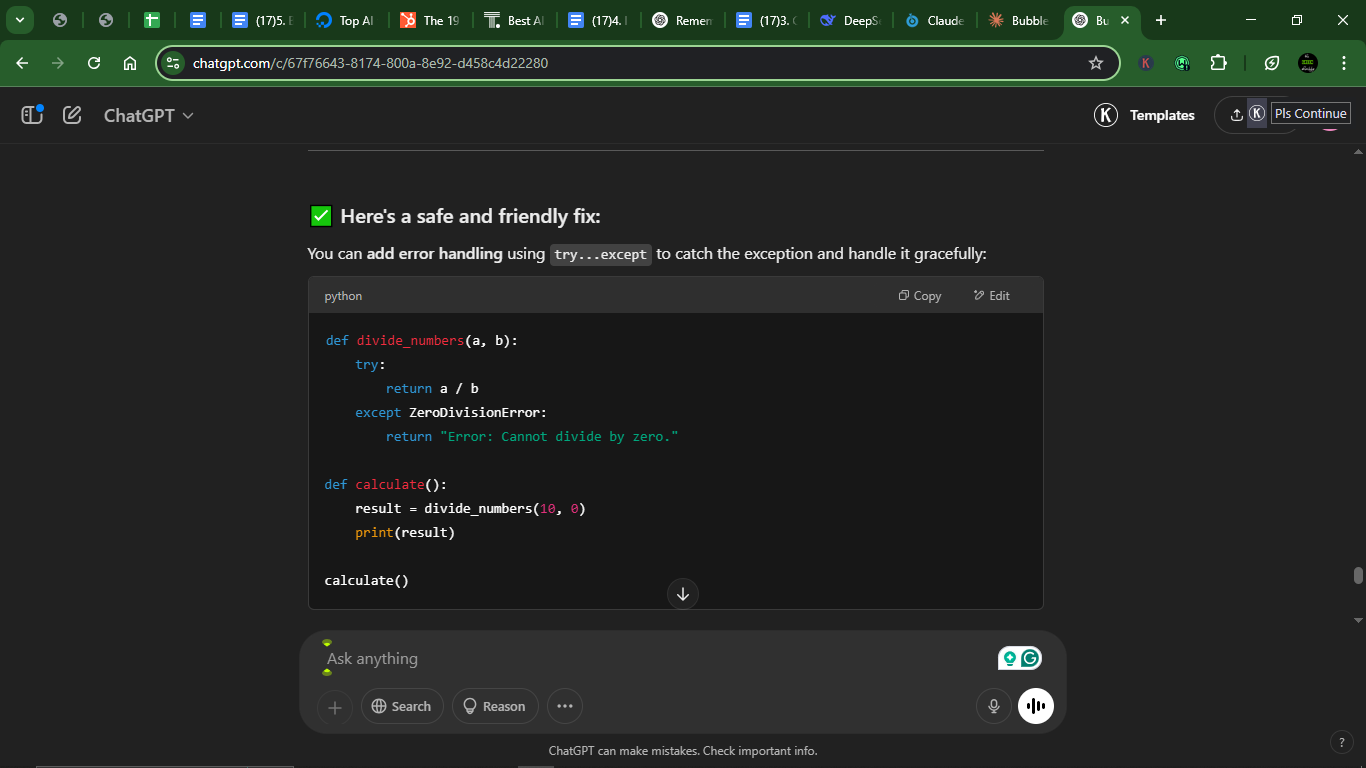

Claude:

ChatGPT:

1. Accuracy: Both correctly identify the ZeroDivisionError and provide working fixes. Both also suggest try-except blocks for graceful handling.

2. Clarity:

- Claude:

- Explains why division by zero is undefined (mathematically).

- Offers two solutions (conditional check + exception handling).

- Suggests additional strategies (user input, custom errors, logging).

- ChatGPT:

- More concise and straight to the point.

- Uses a simpler error message (returns a string directly).

- Fewer options but cleaner for beginners.

3. Efficiency: Both solutions avoid crashing and handle the error. ChatGPT’s version is shorter (returns a string directly), and Claude’s version is more flexible (e.g., return None allows numeric checks later).

4. Debugging skill: Both correctly diagnose the issue. Claude provides multiple approaches (defensive check + exception handling), while ChatGPT focuses on one clean solution.

5. Explanations: Claude gives a detailed rationale (why division by zero fails) and extra tips (logging, user input, custom errors). ChatGPT is brief but clear (it directly addresses the fix) with no extra fluff (good for quick copy-paste).

Winner: Claude.

Claude wins for having comprehensive error-handling strategies, educational value (explains math + multiple approaches), and flexibility (None return vs. string). ChatGPT is cleaner but lacks depth.
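The two strategies discussed above, a defensive check and exception handling, can be sketched like this. The function names and the error message are placeholders of mine, and returning None on failure is just one of the options mentioned.

```python
def divide_with_check(a, b):
    """Defensive check: refuse to divide when the divisor is zero."""
    if b == 0:
        return None  # callers can test for None before using the result
    return a / b


def divide_with_exception(a, b):
    """Exception handling: attempt the division and catch the failure."""
    try:
        return a / b
    except ZeroDivisionError:
        print("Error: cannot divide by zero.")
        return None


if __name__ == "__main__":
    print(divide_with_check(10, 0))
    print(divide_with_exception(10, 2))
```

Returning None rather than an error string keeps the return type predictable: the caller gets either a number or nothing, never text that might be mistaken for a result.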

Overall performance comparison

Claude and ChatGPT gave equally accurate responses to all 10 tasks.

Claude wins in:

- Teaching concepts (detailed explanations, analogies).

- Debugging depth (multiple strategies, edge-case handling).

- Documentation depth (detailed docstrings with examples, exhaustive READMEs).

ChatGPT wins in:

- Readability (concise, modern formatting).

- Quick fixes (straight to the point).

- Beginner-friendly (simpler examples).

It’s a 5-5 tie in my 10-task battle. Use Claude for learning, debugging, and robustness, and deploy ChatGPT for clean code, quick solutions, and readability.

Let’s break down the comparison visually:

| Criteria | Claude | ChatGPT | Winner |
| --- | --- | --- | --- |
| Accuracy | Correct solutions, robust error handling | Correct solutions, concise fixes | Tie |
| Clarity | Detailed explanations, thorough documentation | Cleaner, more readable code | ChatGPT (simplicity) |
| Efficiency | Optimal algorithms, but verbose | Same efficiency, more concise | Tie |
| Debugging skill | Deep dives, multiple approaches | Quick fixes, straight to the point | Claude (depth) |
| Explanations | Teaches concepts and analogies | Brief but actionable | Claude (teaching) |
| Best for | Learning, complex debugging, and docs | Quick solutions, clean code | Depends on need |

Why do Claude and ChatGPT matter to coders?

As a coder (or someone who dabbles in coding, like me), you know that efficiency and accuracy are key. When you’re under tight deadlines, or even when you’re just trying to get a project across the finish line, you need tools that don’t just talk big but walk the walk. That’s why Claude and ChatGPT are so handy.

Why should you care about them as a coder?

Let me break it down:

1. Faster development

Both Claude and ChatGPT can significantly speed up your workflow. Instead of manually writing every single line of code or hunting down bugs for hours, these AIs can generate code on demand, identify issues, and propose fixes. If you’re running behind your deadline, having a reliable AI assistant like these two can shave hours off repetitive tasks.

2. Increased productivity

AI tools like Claude and ChatGPT aren’t here to replace you. Rather, they are here to enhance your abilities. Whether it’s generating boilerplate code, solving algorithmic problems, or automating documentation, both models allow you to focus on the more creative or complex aspects of coding. This is where you, as a developer, can truly shine while leaving the grunt work to the AI.

3. Error reduction and debugging

One of the most frustrating aspects of coding is debugging. Even seasoned coders get stuck in the weeds sometimes. This is where both Claude and ChatGPT can make a huge impact. They can identify errors in your code, explain why things aren’t working, and suggest changes. It’s like having a second pair of eyes, except these eyes never get tired or miss a detail.

4. Learning and skill enhancement

For those who are still learning to code, Claude and ChatGPT offer an excellent way to speed up the learning process. They can help explain difficult concepts, provide examples, and give feedback on your work. Think of them as your own personal tutor, available 24/7.

5. Documentation and communication

Documentation is often the last thing on a developer’s to-do list, but it’s critical. Claude, in particular, is fantastic for writing clear, concise, and structured documentation. Whether it’s generating docstrings, README files, or API documentation, these models make your life easier by taking care of the written explanations while you focus on the code itself.

6. Collaborative potential

Claude and ChatGPT are also great for teamwork. Whether you’re working on a large codebase or collaborating with a remote team, you can use these tools to help explain complex code or even review pull requests. It’s like having a constant brainstorming partner who’s always there to contribute ideas, fix errors, and keep things running smoothly.

Pricing for Claude and ChatGPT

When it comes to pricing, both Claude and ChatGPT offer flexible options, though their cost structures and features vary. Here’s a breakdown of their pricing tiers:

ChatGPT pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to GPT-4o mini, real-time web search, limited access to GPT-4o and o3-mini, limited file uploads, data analysis, image generation, voice mode, Custom GPTs | $0/month |
| Plus | Everything in Free, plus: Extended messaging limits, advanced file uploads, data analysis, image generation, voice modes (video/screen sharing), access to o3-mini, custom GPT creation | $20/month |
| Pro | Everything in Plus, plus: Unlimited access to reasoning models (including GPT-4o), advanced voice features, research previews, high-performance tasks, access to Sora video generation, and Operator (U.S. only) | $200/month |

Claude pricing

| Plan | Features | Cost |
| --- | --- | --- |
| Free | Access to the latest Claude model, use on web, iOS, and Android, ask about images and documents | $0/month |
| Pro | Everything in Free, plus more usage, organized chats and documents with Projects, access to additional Claude models (Claude 3.7 Sonnet), and early access to new features | $18/month (yearly) or $20/month (monthly) |
| Team | Everything in Pro, plus more usage, centralized billing, early access to collaboration features, and a minimum of five users | $25/user/month (yearly) or $30/user/month (monthly) |
| Enterprise | Everything in Team, plus: Expanded context window, SSO, domain capture, role-based access, fine-grained permissioning, SCIM for cross-domain identity management, and audit logs | Custom pricing |

Conclusion

AI has evolved from a buzzword to an essential tool for developers, with Claude and ChatGPT leading the charge. After rigorously testing both across 10 coding tasks, the results were a dead-even tie, showing that neither is universally superior. Each excelled in distinct ways:

ChatGPT is your quick, versatile ally, excelling in rapid prototyping, concise code, modern formatting (e.g., emoji-filled READMEs), and beginner-friendly explanations, and it’s best for daily coding when speed and readability matter.

Claude is your thoughtful, detail-oriented partner, perfect for in-depth debugging (e.g., multiple solutions for ZeroDivisionError), educational value (analogies like Russian dolls for recursion), and robust documentation. Its strength lies in breaking down complex concepts or writing maintainable, well-documented code.

Personal preference matters. With a 5-5 tie on the scoreboard, the best way to choose is to try both and let your workflow decide.

FAQs for Claude vs ChatGPT for coding

Which AI is better for generating code, Claude or ChatGPT?

Both Claude and ChatGPT generate code well, but their strengths differ. Claude stands out for robustness, error handling, and teaching value (e.g., detailed docstrings, thorough debugging). ChatGPT is particularly strong at producing concise, readable, context-aware code, and it’s well suited to quick solutions (e.g., cleaner code, modern formatting).

Can these AIs debug my code effectively?

Yes, both Claude and ChatGPT can debug code, but they go about it differently. Claude provides deep dives (multiple approaches, edge-case handling), while ChatGPT offers faster fixes with minimal explanation.
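To make that contrast concrete, here is a minimal Python sketch of the two styles applied to a ZeroDivisionError. It is an illustration only, not the actual output of either model, and the function names `average_quick` and `average_thorough` are hypothetical.

```python
def average_quick(values):
    """Quick-fix style: guard the one failing case and move on."""
    # An empty list would make len(values) zero and raise ZeroDivisionError.
    return sum(values) / len(values) if values else 0.0


def average_thorough(values):
    """Deep-dive style: validate the input and fail with a clear message."""
    if values is None:
        raise ValueError("values must not be None")
    numbers = list(values)
    if not numbers:
        # Raise a descriptive error instead of silently returning a default.
        raise ValueError("cannot average an empty sequence")
    return sum(numbers) / len(numbers)


if __name__ == "__main__":
    print(average_quick([]))
    print(average_thorough([2, 4]))
```

The quick version is shorter and never crashes on an empty list, but it hides the problem behind a default value. The thorough version is longer and surfaces bad input early, which is the trade-off between speed and depth described above.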

Do I need to be a professional coder to use these AI models?

Not at all. You don’t need to be a seasoned coder to benefit from Claude or ChatGPT. These AIs are designed to assist coders of all skill levels, from beginners to experts. That said, to play to each model’s strengths, Claude is better for beginners (detailed analogies, step-by-step breakdowns), while ChatGPT is great for experienced devs who want concise answers.

How accurate are Claude and ChatGPT at writing documentation?

Both models are excellent at generating documentation, but Claude has a slight edge here because it generates detailed, well-structured docstrings, README files, and API documentation. ChatGPT is a good fit for those who want clarity, precision, and scannability.

Can these AIs handle multiple programming languages?

Yes, both Claude and ChatGPT can work with multiple programming languages, including Python, JavaScript, Java, C++, and more.

Do Claude and ChatGPT have live internet access for real-time updates?

ChatGPT can access live data from the internet, which is useful for real-time research and for issues that require up-to-date information, although this often comes at the expense of depth. Claude, on the other hand, doesn’t have live internet access. It works solely from its training data, which can be an advantage for privacy and for consistent responses based on known facts.

Are there any notable limitations to using these AI models for coding?

Both Claude and ChatGPT have their limitations. For instance, both lack human intuition when solving novel or complex problems. Claude lacks real-time internet access and sometimes over-explains (e.g., in the nested conditionals refactor). ChatGPT may oversimplify (e.g., it skipped edge-case checks in the Flask API), which costs it some depth.

Which AI is more cost-effective for coding tasks?

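Both Claude and ChatGPT offer free versions and subscription plans, with pricing that varies by level of access and features. For individual paid plans the gap is small: Claude Pro costs $18/month when billed yearly, while ChatGPT Plus costs $20/month, so Claude comes out slightly more affordable.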
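For a rough sense of what that means over a year, here is a small Python sketch that multiplies out the monthly list prices from the pricing tables in this article. The plan labels and the helper names `annual_cost` and `annual_costs` are my own for illustration, and prices may have changed since publication.

```python
MONTHS_PER_YEAR = 12

# Monthly list prices in USD, copied from the pricing tables in this article.
PLANS = {
    "ChatGPT Plus": 20,
    "ChatGPT Pro": 200,
    "Claude Pro (monthly billing)": 20,
    "Claude Pro (yearly billing)": 18,
}


def annual_cost(monthly_price):
    """Return the cost of twelve months at the given monthly price."""
    return monthly_price * MONTHS_PER_YEAR


def annual_costs(plans):
    """Map each plan name to its twelve-month cost."""
    return {name: annual_cost(price) for name, price in plans.items()}


if __name__ == "__main__":
    # Print plans from cheapest to most expensive over a year.
    for name, cost in sorted(annual_costs(PLANS).items(), key=lambda item: item[1]):
        print(f"{name}: ${cost}/year")
```

On these numbers, Claude Pro billed yearly works out to $216 a year against $240 for ChatGPT Plus, a difference of $24.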

Disclaimer!

This publication, review, or article (“Content”) is based on our independent evaluation and is subjective, reflecting our opinions, which may differ from others’ perspectives or experiences. We do not guarantee the accuracy or completeness of the Content and disclaim responsibility for any errors or omissions it may contain.

The information provided is not investment advice and should not be treated as such, as products or services may change after publication. By engaging with our Content, you acknowledge its subjective nature and agree not to hold us liable for any losses or damages arising from your reliance on the information provided.

Always conduct your own research and consult professionals where necessary.